A2A vs MCP for AIOps: The New DevOps Frontier for AI Agent Orchestration

How Google's Agent-to-Agent Protocol and Anthropic's Model Context Protocol are reshaping AIOps-based automation and orchestration for DevOps teams

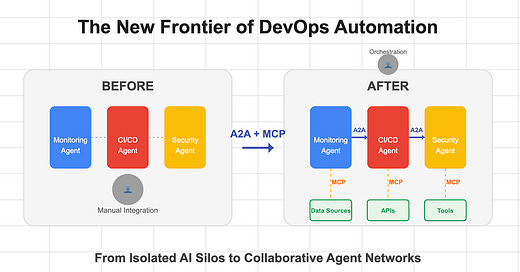

As DevOps engineers, we're no strangers to automation. We've spent years stitching together scripts, configuring CI/CD pipelines, and building monitoring dashboards to keep systems running smoothly. We've heard countless promises about AI "agents" taking over repetitive tasks and AIOps solving all our problems, but until recently these agents lived in isolated silos, each tied to its own vendor or platform.

That's about to change.

Google's recently announced Agent-to-Agent (A2A) protocol and Anthropic's Model Context Protocol (MCP) are poised to transform how AI agents operate in our environments. These aren't just incremental improvements to existing tools; they represent an entirely new paradigm for AI-driven automation and AIOps.

In this deep dive, I'll explore what A2A and MCP are, how they work, how they compare, and, most importantly, what this means for DevOps teams planning to implement real AIOps (not vendor-pushed solutions) in the near future.

The Problem: Islands of Automation

Before diving into A2A and MCP, let's understand the problem they're trying to solve.

Today's AI agent landscape resembles the early days of cloud computing: proprietary solutions that don't talk to each other. You might have an AI agent monitoring your infrastructure, another handling customer support tickets, and a third optimizing your CI/CD pipeline—but they operate independently, with no ability to coordinate their efforts.

This fragmentation limits the potential of AI agents in enterprise environments. When agents can't communicate, you end up with:

Manual handoffs between systems

Duplicate data and functionality

Brittle integrations that break easily

Limited automation of complex workflows

Sound familiar? These are exactly the pain points that drove the adoption of microservices, APIs, and service meshes. Now, we're seeing similar patterns with AI agent communication.

Google's Agent-to-Agent (A2A) Protocol: The Basics

Google introduced A2A in April 2025 as an open protocol that enables autonomous AI agents to communicate, coordinate tasks, and exchange information across applications and vendors. With support from more than 50 technology partners and service providers, including Atlassian, Salesforce, Deloitte, and Accenture, A2A aims to become the standard language for agent collaboration.

What is A2A?

In simple terms, A2A is an API for agents. Just as microservices use APIs to talk to each other, autonomous agents use A2A to send messages and requests between themselves.

The protocol provides:

Standardized messaging between agents

Task lifecycle management (submitted, working, completed, failed, etc.)

Capability discovery through Agent Cards

Secure authentication between agents

Real-time updates via Server-Sent Events

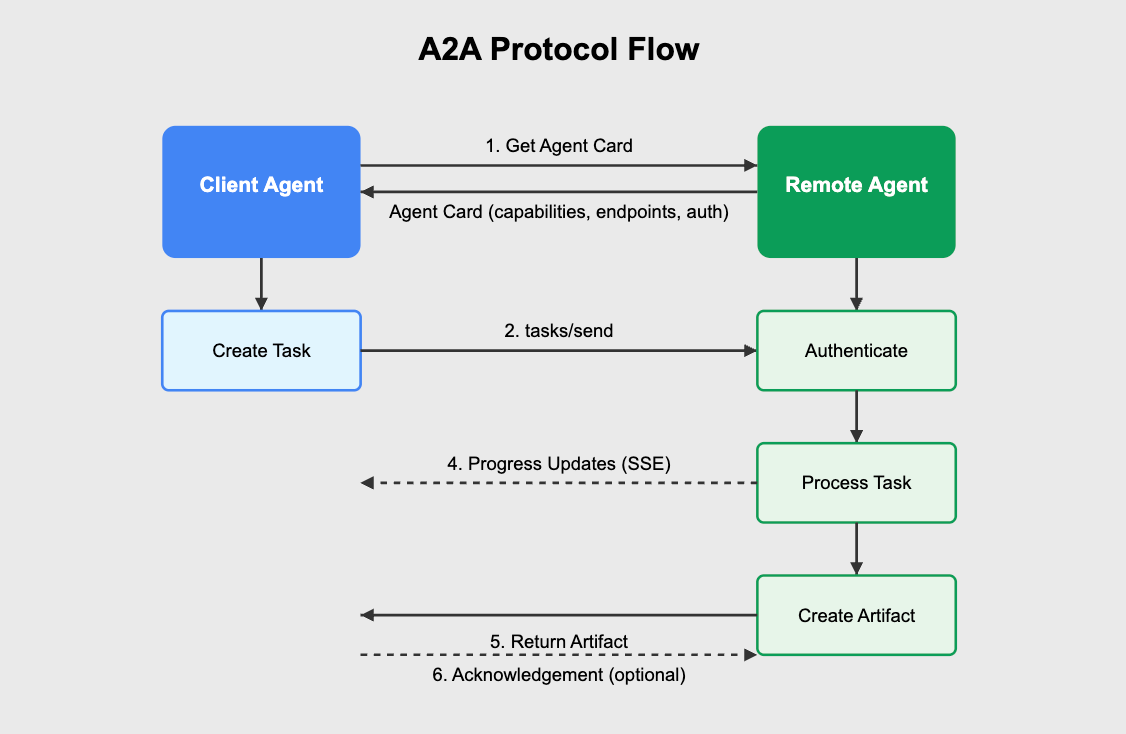

How A2A Works

At its core, A2A defines a client-server communication model between agents. In any given interaction:

A client agent initiates a task (asking for something to be done)

A remote agent processes the task and produces a result

These roles are dynamic—an agent might be a client in one interaction and a remote agent in another.

The protocol is built on familiar web standards: JSON-RPC 2.0 requests over HTTP(S), plus Server-Sent Events for streaming updates. This means it can integrate with existing infrastructure and security systems rather than requiring new ones.
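As a rough sketch of what such a request could look like, the function below builds the JSON-RPC 2.0 body a client agent might POST to a remote agent to submit a task. The method name `tasks/send` and the `params` layout reflect our reading of the early A2A specification and may differ in later versions; the task text is hypothetical.

```python
import json
import uuid


def build_send_task_request(task_id: str, text: str, request_id: int = 1) -> str:
    """Build a JSON-RPC 2.0 request body for submitting a task to a remote agent.

    Assumption: the method name "tasks/send" and the params shape follow the
    early A2A spec; check the current spec before relying on them.
    """
    payload = {
        "jsonrpc": "2.0",          # version marker required by JSON-RPC 2.0
        "id": request_id,          # lets the client match the response to this request
        "method": "tasks/send",    # assumed A2A method name
        "params": {
            "id": task_id,         # client-chosen unique task ID
            "message": {
                "role": "user",
                "parts": [{"type": "text", "text": text}],
            },
        },
    }
    return json.dumps(payload)


if __name__ == "__main__":
    # Hypothetical task text; the resulting string would be the body of an HTTP POST.
    body = build_send_task_request(str(uuid.uuid4()), "Summarize last night's error logs")
    print(body)
```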

Let's look at the core components of A2A:

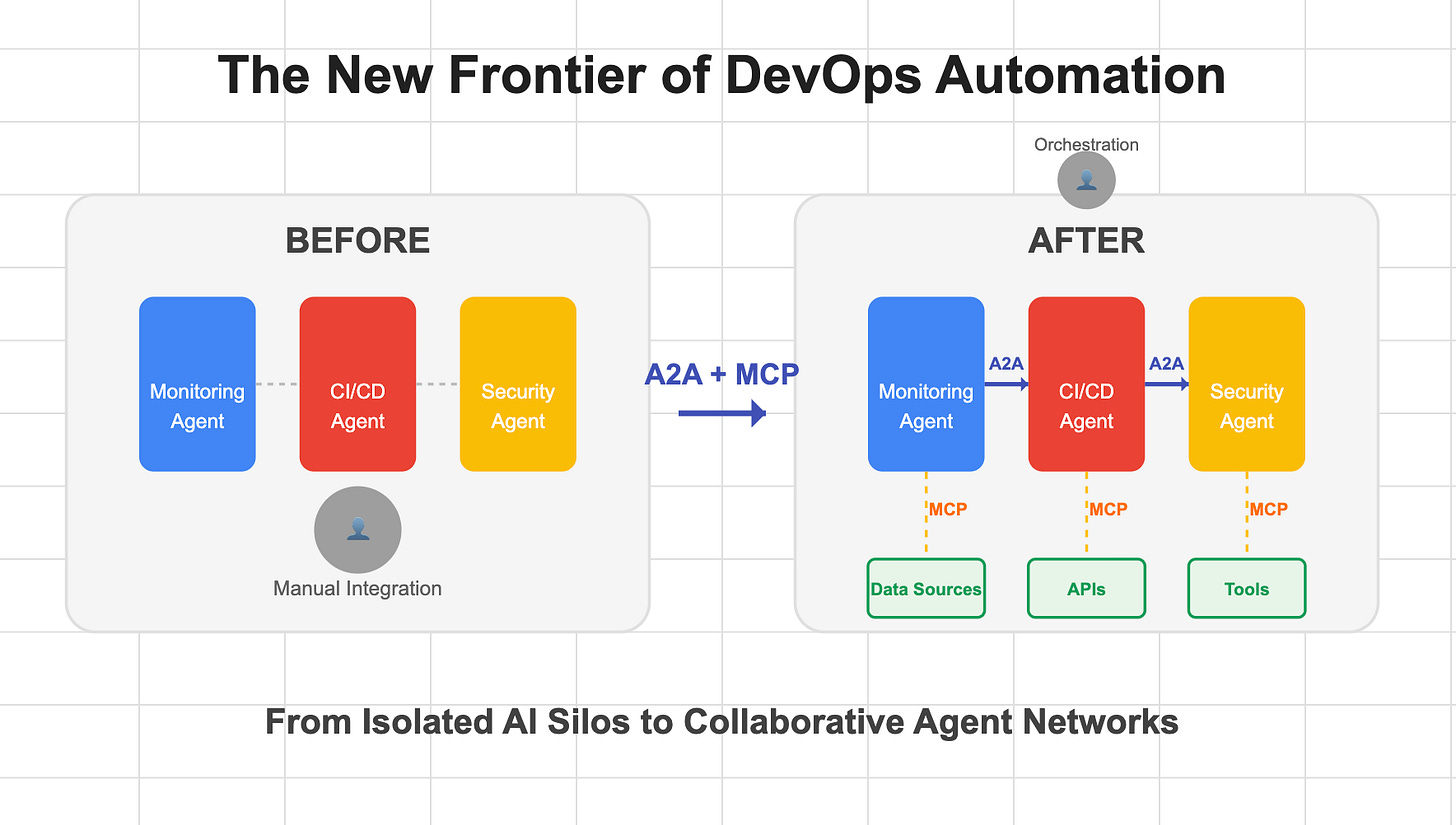

Agent Cards

For agents to discover each other's capabilities, A2A uses Agent Cards. An agent exposes a small JSON file (typically at a standard URL like https://agent-domain/.well-known/agent.json) that describes:

What the agent can do (its skills)

How to connect to it (endpoints)

What authentication it requires

This is conceptually similar to OpenAPI specifications or service registries, allowing agents to dynamically discover other agents' capabilities.
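As an illustration, the sketch below parses a hypothetical Agent Card and checks whether the agent advertises a given skill. The field names follow the early A2A specification as we understand it; the agent, its URL, and its skills are made up, and `fetch_agent_card` (not called here, since it needs network access) assumes the well-known path mentioned above.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical Agent Card; field names follow our reading of the early A2A spec.
AGENT_CARD_JSON = """
{
  "name": "diagnostics-agent",
  "description": "Investigates production incidents",
  "url": "https://diagnostics.example.com/a2a",
  "version": "0.1.0",
  "capabilities": {"streaming": true, "pushNotifications": false},
  "authentication": {"schemes": ["bearer"]},
  "skills": [
    {"id": "analyze-logs", "name": "Analyze logs", "description": "Find error patterns in logs"},
    {"id": "profile-cpu", "name": "Profile CPU", "description": "Explain CPU spikes"}
  ]
}
"""


def fetch_agent_card(base_url: str) -> dict:
    """Fetch an Agent Card from the well-known path (needs network; not called below)."""
    url = base_url.rstrip("/") + "/.well-known/agent.json"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.load(resp)


def find_skill(card: dict, skill_id: str) -> Optional[dict]:
    """Return the skill entry with the given ID, or None if the agent lacks it."""
    for skill in card.get("skills", []):
        if skill.get("id") == skill_id:
            return skill
    return None


card = json.loads(AGENT_CARD_JSON)
print(card["url"], [s["id"] for s in card["skills"]])
print(find_skill(card, "profile-cpu") is not None)  # True
print(find_skill(card, "deploy") is None)           # True
```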

Tasks and Artifacts

The basic unit of work in A2A is a Task. When a client agent needs something done, it creates a task (with a unique ID) and sends it to a remote agent. Tasks have defined lifecycle states:

submitted

working

input-required

completed

failed

canceled

The result of a completed task is an Artifact—the deliverable from the task, which could be text, a file, or structured data.
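The lifecycle can be modeled as a small state machine. This is a sketch: the six state names come from the list above, but the transition table (which moves are legal) is our own simplifying assumption rather than something quoted from the A2A specification.

```python
from enum import Enum


class TaskState(str, Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# Assumed transition table (our simplification, not quoted from the spec).
ALLOWED = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.CANCELED, TaskState.FAILED},
    TaskState.WORKING: {TaskState.INPUT_REQUIRED, TaskState.COMPLETED,
                        TaskState.FAILED, TaskState.CANCELED},
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.CANCELED, TaskState.FAILED},
    TaskState.COMPLETED: set(),   # terminal
    TaskState.FAILED: set(),      # terminal
    TaskState.CANCELED: set(),    # terminal
}


class Task:
    def __init__(self, task_id: str):
        self.id = task_id
        self.state = TaskState.SUBMITTED
        self.history = [self.state]
        self.artifacts: list = []

    def transition(self, new_state: TaskState) -> None:
        """Move to new_state, rejecting transitions the table does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"illegal transition {self.state.value} -> {new_state.value}")
        self.state = new_state
        self.history.append(new_state)

    @property
    def is_terminal(self) -> bool:
        # A state with no outgoing transitions is terminal.
        return not ALLOWED[self.state]


task = Task("investigate-cpu-spike-231")
task.transition(TaskState.WORKING)
task.transition(TaskState.COMPLETED)
task.artifacts.append({"type": "text", "text": "Root cause: memory leak"})
print([s.value for s in task.history])  # ['submitted', 'working', 'completed']
```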

Messaging and Parts

Agents communicate through messages containing content and context related to tasks. A2A supports rich message content via Parts, which can include:

Text snippets

Files or images

Structured JSON data

This modular design allows agents to exchange complex information, not just plain text.
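A message mixing the three kinds of Parts might be assembled as below. The part shapes (`type`, `text`, `file`, `data`, `mimeType`, `bytes`) follow our reading of the early A2A specification and may have changed since; the message content is hypothetical.

```python
import base64
import json
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Message:
    role: str                                   # "user" (client side) or "agent" (remote side)
    parts: List[Dict[str, Any]] = field(default_factory=list)

    def add_text(self, text: str) -> "Message":
        self.parts.append({"type": "text", "text": text})
        return self

    def add_file(self, name: str, mime_type: str, content: bytes) -> "Message":
        # Inline file content is base64-encoded so it survives JSON transport.
        self.parts.append({
            "type": "file",
            "file": {"name": name, "mimeType": mime_type,
                     "bytes": base64.b64encode(content).decode("ascii")},
        })
        return self

    def add_data(self, data: Dict[str, Any]) -> "Message":
        self.parts.append({"type": "data", "data": data})
        return self

    def to_json(self) -> str:
        return json.dumps({"role": self.role, "parts": self.parts})


# Hypothetical content for illustration only.
msg = (Message(role="user")
       .add_text("CPU spike on app-server-3, please investigate")
       .add_file("top.txt", "text/plain", b"PID 4121 java 97.3% CPU")
       .add_data({"host": "app-server-3", "cpu_pct": 97.3}))
print([p["type"] for p in msg.parts])  # ['text', 'file', 'data']
```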

Real-time Communication

For long-running tasks, A2A supports asynchronous progress updates through:

Server-Sent Events (SSE) for streaming updates

Push notifications to callback URLs

This means client agents can receive real-time feedback without constant polling.
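Consuming an SSE stream mostly means parsing its simple text framing. Here is a minimal parser written against the basic SSE rules (`field: value` lines, `:` comment lines, a blank line ending each event); the JSON payloads in the sample stream are hypothetical task-status updates, not the exact A2A event schema.

```python
import json
from typing import Any, Dict, Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[Dict[str, Any]]:
    """Yield one dict per Server-Sent Event, decoding the data field as JSON.

    An event not terminated by a blank line is discarded, as the SSE rules require.
    """
    event_type = "message"
    data_lines = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":                       # blank line: dispatch the pending event
            if data_lines:
                yield {"event": event_type, "data": json.loads("\n".join(data_lines))}
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue                         # comment, often used as a keep-alive
        name, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]                # drop the single optional space after ":"
        if name == "event":
            event_type = value
        elif name == "data":
            data_lines.append(value)


# Hypothetical stream of task-status updates.
stream = [
    ": keep-alive\n",
    'data: {"id": "t-1", "status": {"state": "working"}}\n',
    "\n",
    'data: {"id": "t-1", "status": {"state": "completed"}, "final": true}\n',
    "\n",
]
for ev in parse_sse(stream):
    print(ev["data"]["status"]["state"])  # prints "working", then "completed"
```

With a live connection you would feed the parser decoded lines from the HTTP response body instead of a list.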

A2A in Action: A Technical Diagram

In this diagram:

Agent Discovery: The client agent locates the remote agent's Agent Card to learn its capabilities

Task Initiation: The client sends a task to the remote agent

Authentication: The remote agent validates the client's credentials

Processing: The remote agent works on the task, potentially sending progress updates

Completion: The remote agent marks the task as completed and returns artifacts

Feedback: The client acknowledges receipt and may provide feedback
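The flow above can be simulated in a single process to make each step concrete. This is a hypothetical sketch, not an A2A SDK: `RemoteAgent`, `run_client`, and the shared-token check are illustrative stand-ins for HTTP calls and real authentication, and the final feedback step is not modeled.

```python
import uuid
from typing import Callable, Dict, List


class RemoteAgent:
    """In-process stand-in for a remote A2A agent (no network involved)."""

    def __init__(self, token: str):
        self._token = token  # hypothetical shared secret
        self.card = {"name": "diagnostics-agent", "skills": [{"id": "analyze-logs"}]}

    def handle_task(self, task: Dict, auth_token: str,
                    on_update: Callable[[str], None]) -> Dict:
        # Authentication: reject callers without the expected credential.
        if auth_token != self._token:
            return {"id": task["id"], "state": "failed", "error": "unauthorized"}
        # Processing: report progress before producing the result.
        on_update("working")
        artifact = {"type": "text", "text": f"analyzed: {task['input']}"}
        # Completion: mark the task done and return its artifact.
        return {"id": task["id"], "state": "completed", "artifacts": [artifact]}


def run_client(agent: RemoteAgent, token: str, skill: str, text: str) -> Dict:
    # Agent discovery: confirm the remote agent advertises the skill we need.
    if skill not in {s["id"] for s in agent.card["skills"]}:
        raise LookupError(f"{agent.card['name']} has no skill {skill!r}")
    # Task initiation: create a task with a unique ID.
    task = {"id": str(uuid.uuid4()), "skill": skill, "input": text}
    updates: List[str] = []
    result = agent.handle_task(task, token, updates.append)
    result["updates"] = updates
    return result


agent = RemoteAgent(token="s3cret")
print(run_client(agent, "s3cret", "analyze-logs", "app-server-3 logs")["state"])  # completed
print(run_client(agent, "wrong", "analyze-logs", "app-server-3 logs")["state"])   # failed
```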


Anthropic's Model Context Protocol (MCP): The Complementary Standard

While A2A focuses on agent-to-agent communication, Anthropic's Model Context Protocol (MCP) tackles a different challenge: how AI models connect to external data and tools.

What is MCP?

Announced in late 2024, MCP is an open protocol that standardizes how AI models access data sources, tools, and context. Anthropic describes it as "a USB-C port for AI applications," providing a universal way to plug models into various databases, knowledge bases, or services.

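MCP servers expose standardized primitives that AI models can use to retrieve or modify data: resources (context such as files or records), tools (functions the model can invoke to take actions), and prompts (reusable templates). This gives models access to information and actions they wouldn't otherwise have.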
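On the wire, these operations are JSON-RPC 2.0 requests. The sketch below builds the requests a client might send to list a server's tools, call one, and read a resource. The method names (`tools/list`, `tools/call`, `resources/read`) are MCP's; the tool name, arguments, and file URI are hypothetical, and the transport and `initialize` handshake are left out.

```python
import itertools
import json
from typing import Any, Dict, Optional

_ids = itertools.count(1)  # JSON-RPC request IDs must be unique per connection


def mcp_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request as an MCP client would send it."""
    req: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        req["params"] = params
    return req


list_tools = mcp_request("tools/list")
call_tool = mcp_request("tools/call", {
    "name": "query_metrics",                                 # hypothetical tool
    "arguments": {"service": "checkout", "window": "15m"},  # hypothetical arguments
})
read_resource = mcp_request("resources/read",
                            {"uri": "file:///var/log/app/error.log"})  # hypothetical URI

for req in (list_tools, call_tool, read_resource):
    print(json.dumps(req))
```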

How MCP Works

MCP follows a client-server architecture:

MCP clients run inside a host application, such as an AI assistant, IDE, or agent runtime; each client maintains a connection to a single server

MCP servers represent data sources or tools

Through its clients, a host can connect to one or many MCP servers to fetch context or invoke operations. For example, one MCP server might expose Google Drive files, another a database, and another a third-party API, and the agent can query all of them through a consistent interface.
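The one-host, many-servers pattern can be sketched in-process: the host discovers each server's tools once, then routes every call to the server that owns that tool. `FakeMCPServer`, `Host`, and the tool names are hypothetical stand-ins; a real host would talk to each server over an MCP transport such as stdio or HTTP.

```python
from typing import Any, Callable, Dict


class FakeMCPServer:
    """Stand-in for one MCP server: a name plus the tools it exposes."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., Any]]):
        self.name = name
        self.tools = tools

    def list_tools(self):
        return sorted(self.tools)

    def call_tool(self, tool: str, arguments: Dict[str, Any]) -> Any:
        return self.tools[tool](**arguments)


class Host:
    """Keeps one connection per server and routes each tool call to its owner."""

    def __init__(self, *servers: FakeMCPServer):
        self.routes: Dict[str, FakeMCPServer] = {}
        for server in servers:
            for tool in server.list_tools():       # discovery, analogous to tools/list
                if tool in self.routes:
                    raise ValueError(f"tool {tool!r} exposed by two servers")
                self.routes[tool] = server

    def call(self, tool: str, **arguments: Any) -> Any:
        return self.routes[tool].call_tool(tool, arguments)


# Hypothetical servers and tools.
drive = FakeMCPServer("drive", {"search_docs": lambda query: [f"runbook matching {query!r}"]})
db = FakeMCPServer("postgres", {
    "slow_queries": lambda limit: [("SELECT * FROM orders WHERE status = 'open'", 4.2)][:limit],
})
host = Host(drive, db)
print(host.call("search_docs", query="latency"))
print(host.call("slow_queries", limit=1))
```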

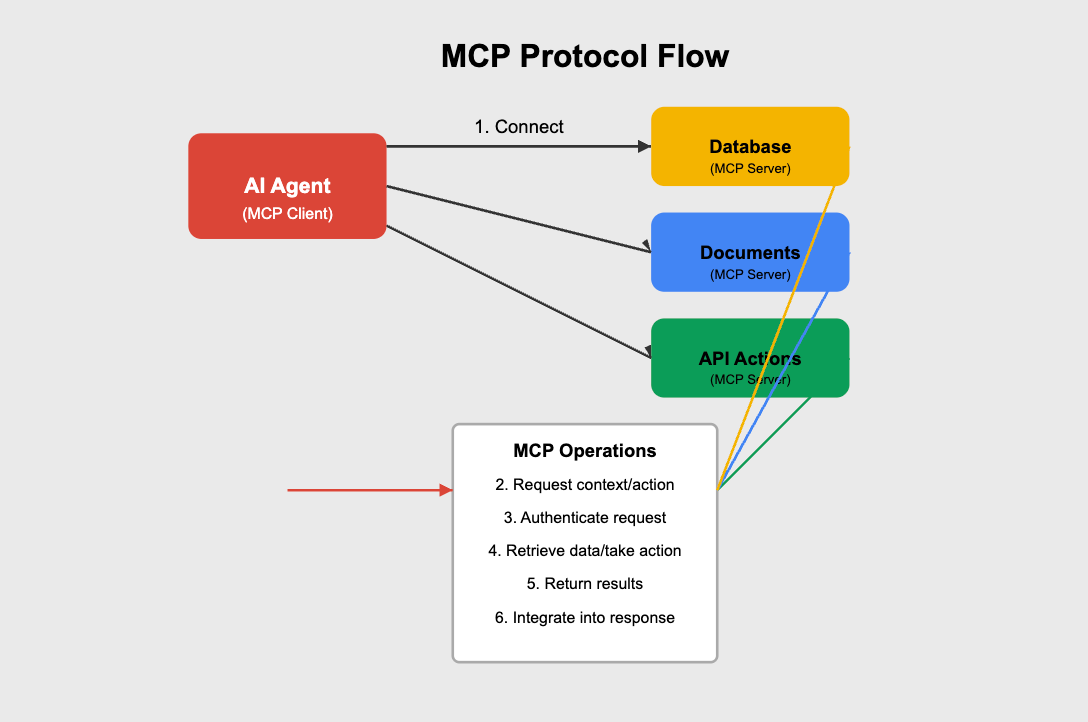

MCP in Action: A Technical Diagram

In this diagram:

Connection: The AI agent (MCP client) connects to various MCP servers

Context Request: The agent requests specific information or tool access

Authentication: The MCP server validates the request

Data Retrieval/Action: The server fetches the requested data or performs an action

Response: The server returns the results to the agent

Integration: The agent incorporates the information into its reasoning or response
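The server side of these steps can be sketched as a JSON-RPC dispatcher that checks a credential, answers `tools/list`, and executes `tools/call`. The method names and the `content`/`isError` result shape follow the MCP specification as we understand it; the tool, the metrics, and passing the token as a function argument are hypothetical simplifications (real MCP servers handle authorization at the transport layer, and the `initialize` handshake is omitted).

```python
from typing import Any, Dict

TOOLS = {
    "get_error_rate": {  # hypothetical tool
        "description": "Return the current error rate for a service",
        "inputSchema": {"type": "object",
                        "properties": {"service": {"type": "string"}},
                        "required": ["service"]},
    }
}
FAKE_METRICS = {"checkout": 0.031}   # hypothetical data source
API_TOKEN = "example-token"          # hypothetical credential


def handle(request: Dict[str, Any], token: str) -> Dict[str, Any]:
    rid = request.get("id")
    # Authentication: simplified to a shared-token comparison.
    if token != API_TOKEN:
        return {"jsonrpc": "2.0", "id": rid,
                "error": {"code": -32001, "message": "unauthorized"}}
    method = request.get("method")
    if method == "tools/list":
        tools = [{"name": name, **spec} for name, spec in TOOLS.items()]
        return {"jsonrpc": "2.0", "id": rid, "result": {"tools": tools}}
    if method == "tools/call":
        params = request.get("params", {})
        if params.get("name") != "get_error_rate":
            return {"jsonrpc": "2.0", "id": rid,
                    "error": {"code": -32602, "message": "unknown tool"}}
        # Data retrieval: look up the requested metric.
        service = params.get("arguments", {}).get("service")
        rate = FAKE_METRICS.get(service)
        text = f"{service}: error rate {rate:.1%}" if rate is not None else f"{service}: no data"
        # Response: MCP-style content list the agent can fold into its reasoning.
        return {"jsonrpc": "2.0", "id": rid,
                "result": {"content": [{"type": "text", "text": text}],
                           "isError": rate is None}}
    return {"jsonrpc": "2.0", "id": rid,
            "error": {"code": -32601, "message": "method not found"}}


resp = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "get_error_rate", "arguments": {"service": "checkout"}}},
              API_TOKEN)
print(resp["result"]["content"][0]["text"])  # checkout: error rate 3.1%
```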

A2A vs. MCP: Key Differences and Complementary Roles

While both protocols aim to make AI agents more powerful, they address different aspects of the agent ecosystem:

Scope & Purpose

A2A focuses on agent-to-agent communication—enabling external interactions between autonomous agents

MCP focuses on agent-to-tool/data connectivity—providing a standardized interface for an AI model to access external resources

Use Cases

A2A shines in multi-agent workflows where different specialized agents collaborate on tasks

MCP excels at enriching a single agent with data and actions from various sources

Communication Model

A2A implements a distributed, peer-to-peer model over HTTP, with agents discovering each other through Agent Cards

MCP follows a traditional hub-and-spoke client-server architecture, with AI models connecting to various data sources and tools

Tasks vs. Tools

A2A is task-oriented, concerned with how agents handle jobs and exchange answers

MCP is tool/data-oriented, focused on giving an AI model information or the ability to act on external systems

Combined Power: A2A + MCP

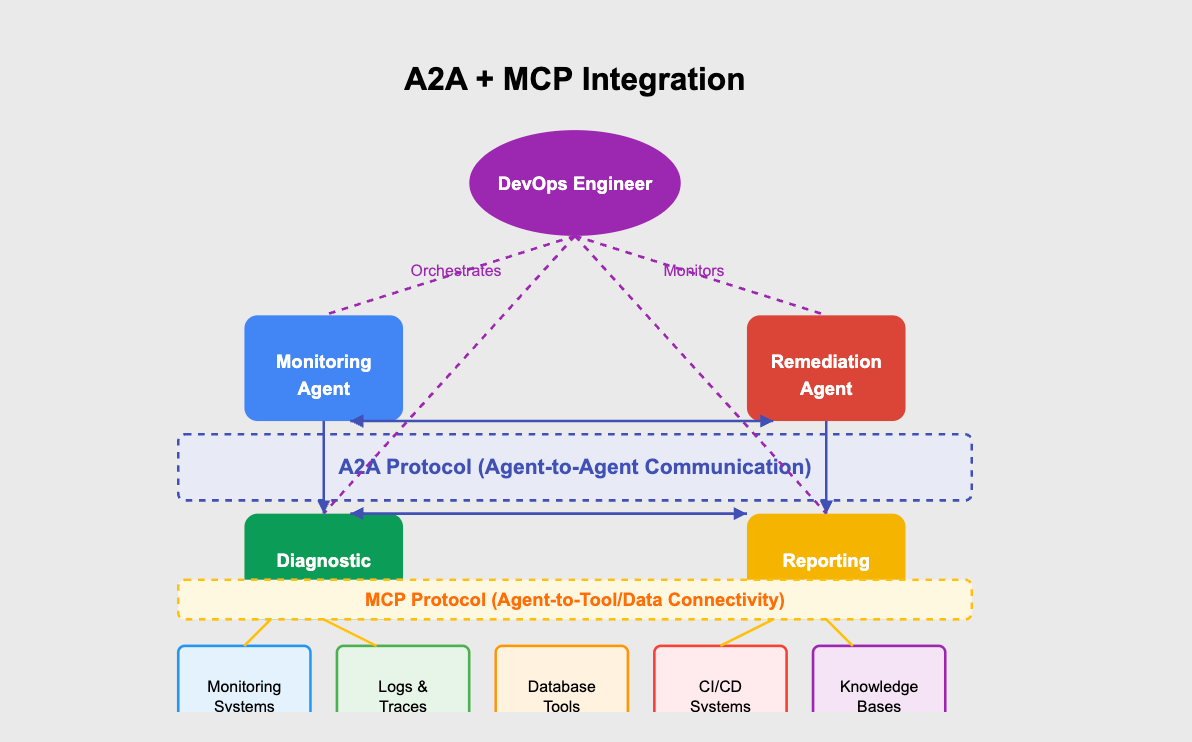

These protocols aren't competitors—they're complementary layers that can work together in a comprehensive AIOps strategy:

MCP feeds an agent with context and actions (vertical integration with tools)

A2A coordinates tasks horizontally across multiple agents or services

In this diagram:

Individual Agents use MCP to connect to their specific data sources and tools

A2A Protocol enables these agents to coordinate complex tasks between themselves

DevOps Engineer oversees the entire system, defining policies and monitoring outcomes
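To illustrate the two layers together, here is a sketch of a single specialist agent that accepts tasks from peers (the A2A side) and fulfils them by calling its own tools (the MCP side). Everything is in-process and hypothetical: `skills` stands in for what the agent would advertise in its Agent Card, and `tools` stands in for its MCP server connections.

```python
from __future__ import annotations

from typing import Any, Callable, Dict


class SpecialistAgent:
    """Accepts A2A-style tasks on the outside; uses MCP-style tools on the inside."""

    def __init__(self, name: str,
                 skills: Dict[str, Callable[[SpecialistAgent, Dict], Any]],
                 tools: Dict[str, Callable[..., Any]]):
        self.name, self.skills, self.tools = name, skills, tools

    def use_tool(self, tool: str, **args: Any) -> Any:
        # Vertical integration: agent -> tool (the role MCP plays).
        return self.tools[tool](**args)

    def handle_task(self, skill: str, payload: Dict) -> Dict:
        # Horizontal coordination: peer agent -> this agent (the role A2A plays).
        if skill not in self.skills:
            return {"state": "failed", "error": f"{self.name} lacks skill {skill!r}"}
        return {"state": "completed", "artifact": self.skills[skill](self, payload)}


def diagnose(agent: SpecialistAgent, payload: Dict) -> str:
    logs = agent.use_tool("fetch_logs", host=payload["host"])
    return "memory leak suspected" if any("OutOfMemoryError" in line for line in logs) else "no finding"


# Hypothetical agent with one skill and one stubbed tool.
diagnostic = SpecialistAgent(
    "diagnostic-agent",
    skills={"diagnose": diagnose},
    tools={"fetch_logs": lambda host: [f"{host} java.lang.OutOfMemoryError: Java heap space"]},
)
print(diagnostic.handle_task("diagnose", {"host": "app-server-3"}))
# {'state': 'completed', 'artifact': 'memory leak suspected'}
```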

Implications for DevOps Teams

What does all this mean for those of us in DevOps? Here are the key impacts and opportunities:

1. Advanced Automation and Workflow Orchestration

A2A enables modular, specialized agents to coordinate complex workflows. Instead of one monolithic automation system, you can have:

A CI/CD agent specializing in deployments

A monitoring agent detecting anomalies

A security agent enforcing compliance

A diagnostic agent troubleshooting issues

Each handles its domain of expertise, and they collaborate via A2A. Need a new capability? Add a new agent to the network without disrupting existing workflows.

For example, an incident response could look like this:

Monitoring Agent: detects an anomaly in production metrics

→ A2A: task "investigate-cpu-spike-231" created

Diagnostic Agent: pulls logs and metrics via MCP and identifies a memory leak as the root cause

→ A2A: task "remediate-app-server-3" created

Remediation Agent: restarts the service and adjusts resources via MCP, then confirms resolution

Once policies and guardrails are in place, this entire workflow could run autonomously within seconds, without human intervention.
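The delegation chain above can be sketched as a loop in which each agent's handler returns its result plus an optional follow-up task. The handlers here are hypothetical stubs standing in for real A2A calls; the `max_hops` guard is our addition, a simple way to stop a runaway delegation loop and hand control back to a human.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A handler returns (result, optional follow-up task as (agent_name, task_name)).
Handler = Callable[[str], Tuple[str, Optional[Tuple[str, str]]]]


def run_workflow(agents: Dict[str, Handler], first: Tuple[str, str],
                 max_hops: int = 10) -> List[str]:
    """Follow delegations until no follow-up task is created; return an audit trail."""
    trail: List[str] = []
    next_task: Optional[Tuple[str, str]] = first
    hops = 0
    while next_task is not None:
        hops += 1
        if hops > max_hops:
            raise RuntimeError("delegation loop suspected; escalating to a human")
        agent_name, task_name = next_task
        result, next_task = agents[agent_name](task_name)
        trail.append(f"{agent_name} <- {task_name}: {result}")
    return trail


# Hypothetical stub agents mirroring the incident above.
agents: Dict[str, Handler] = {
    "diagnostic": lambda task: ("root cause: memory leak", ("remediation", "remediate-app-server-3")),
    "remediation": lambda task: ("service restarted, resolution confirmed", None),
}
for line in run_workflow(agents, ("diagnostic", "investigate-cpu-spike-231")):
    print(line)
```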

2. Enhanced Observability

When agents communicate via standardized protocols, monitoring their activities becomes more straightforward. Since A2A uses HTTP under the hood, you can:

Use existing APM tools to track agent-to-agent calls

Build dashboards showing live agent interactions

Set up distributed tracing across agent workflows

Create audit trails for compliance and debugging

This observability is crucial for AIOps—you need visibility into what your agents are doing, especially as they gain more autonomy.
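As a sketch of what distributed tracing across agent workflows could look like, the snippet below attaches a W3C Trace Context `traceparent` header to each agent-to-agent HTTP call and writes one structured audit line per hop. The helper names and log fields are hypothetical; in production you would more likely let an OpenTelemetry SDK generate and propagate these headers.

```python
import json
import logging
import secrets
import time
from typing import Dict, Optional

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("a2a.audit")  # hypothetical logger name


def make_traceparent(trace_id: Optional[str] = None) -> str:
    """W3C Trace Context header: version-traceid-parentid-flags."""
    trace_id = trace_id or secrets.token_hex(16)   # 32 hex chars, shared by the whole workflow
    span_id = secrets.token_hex(8)                  # 16 hex chars, unique to this hop
    return f"00-{trace_id}-{span_id}-01"            # "01" = sampled


def audited_headers(caller: str, callee: str, task_id: str,
                    parent: Optional[str] = None) -> Dict[str, str]:
    """Headers for an outgoing agent-to-agent call, plus one audit log line."""
    trace_id = parent.split("-")[1] if parent else None
    traceparent = make_traceparent(trace_id)
    log.info(json.dumps({"ts": time.time(), "caller": caller, "callee": callee,
                         "task": task_id, "traceparent": traceparent}))
    return {"Content-Type": "application/json", "traceparent": traceparent}


h1 = audited_headers("monitoring-agent", "diagnostic-agent", "investigate-cpu-spike-231")
h2 = audited_headers("diagnostic-agent", "remediation-agent", "remediate-app-server-3",
                     parent=h1["traceparent"])
print(h1["traceparent"].split("-")[1] == h2["traceparent"].split("-")[1])  # True: same trace
```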

3. Improved Security and Resilience

A2A and MCP bring structure and security to agent interactions:

Authentication can be enforced on every interaction (A2A Agent Cards declare which schemes an agent requires)

Authorization can be enforced at the skill level

Redundancy becomes possible with multiple agents offering similar capabilities

Graceful failure handling through clear task states and error reporting

With proper implementation, these protocols can make agent systems more secure and reliable than ad-hoc integrations.
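Redundancy and clear task states combine naturally into failover. The sketch below tries equivalent agents in order and treats both exceptions and any non-`completed` state as failures. The agent functions are hypothetical stand-ins for A2A calls to agents that advertise the same skill.

```python
from typing import Callable, Dict, List, Sequence


def delegate_with_failover(candidates: Sequence[Callable[[str], Dict]], task_id: str) -> Dict:
    """Try equivalent agents in order until one reports a 'completed' task."""
    errors: List[str] = []
    for agent in candidates:
        name = getattr(agent, "__name__", "agent")
        try:
            result = agent(task_id)
        except Exception as exc:  # broad on purpose in this sketch: network or agent crash
            errors.append(f"{name}: {exc}")
            continue
        if result.get("state") == "completed":
            return result
        errors.append(f"{name}: state={result.get('state')}")
    # Every candidate failed: surface a failed task with the collected reasons.
    return {"id": task_id, "state": "failed", "errors": errors}


# Hypothetical redundant agents.
def primary(task_id):
    raise ConnectionError("timeout")


def secondary(task_id):
    return {"id": task_id, "state": "completed", "artifacts": ["restarted"]}


print(delegate_with_failover([primary, secondary], "remediate-app-server-3")["state"])  # completed
```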

4. New Roles in Agent Orchestration

As agent ecosystems grow, DevOps teams will develop new skills and responsibilities:

Agent Selection: Evaluating and choosing specialized agents for different tasks

Policy Definition: Setting rules for how agents coordinate and delegate

Agent Deployment: Managing the lifecycle of AI agents in your environment

Orchestration: Building the frameworks that guide multi-agent collaboration

Think of it as moving from infrastructure management to AI agent fleet management.

Practical Steps for DevOps Teams

How should DevOps engineers prepare for this emerging paradigm? Here are some concrete actions:

1. Identify Automation Bottlenecks

Look for areas where:

Manual handoffs slow down processes

Similar tasks are performed repeatedly

Multiple systems need to coordinate but don't integrate well

These are prime candidates for agent-based automation.

2. Map Your Current Agent Landscape

Take inventory of existing AI capabilities in your organization:

Which AI assistants or agents are already deployed?

What are their specific strengths and limitations?

Where are the integration gaps?

3. Start Small with Focused Agent Pilots

Begin with contained use cases:

Automate a single repetitive workflow with two cooperating agents

Use A2A for agent communication

Use MCP for connecting to your existing tools

Monitor performance and gather feedback before expanding.

4. Build for Observability from Day One

Ensure you can answer these questions about your agent ecosystem:

Which agents are communicating with each other?

What tasks are being delegated and completed?

Where are bottlenecks or failures occurring?

Instrument your A2A communications with logging and tracing from the start.

5. Define Clear Agent Boundaries and Responsibilities

Create a clear taxonomy of:

What each agent is responsible for

Which tasks should be delegated vs. handled internally

What authorization levels different agents have

Document these boundaries to prevent confusion and security issues.
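One lightweight way to document these boundaries is as a deny-by-default policy that an orchestrator checks before any delegation. The agent names, skills, and the `POLICY` structure below are hypothetical; neither A2A nor MCP prescribes this format.

```python
from typing import Dict, Set

# Hypothetical policy: caller -> callee -> skills the caller may invoke on the callee.
POLICY: Dict[str, Dict[str, Set[str]]] = {
    "monitoring-agent": {"diagnostic-agent": {"diagnose"}},
    "diagnostic-agent": {"remediation-agent": {"restart-service"}},
    "remediation-agent": {},   # may not delegate at all
}


def is_allowed(caller: str, callee: str, skill: str) -> bool:
    """Deny by default: permit only delegations listed explicitly in POLICY."""
    return skill in POLICY.get(caller, {}).get(callee, set())


print(is_allowed("diagnostic-agent", "remediation-agent", "restart-service"))  # True
print(is_allowed("monitoring-agent", "remediation-agent", "restart-service"))  # False
```

Keeping the policy in version control alongside the agents' Agent Cards makes boundary changes reviewable like any other configuration change.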

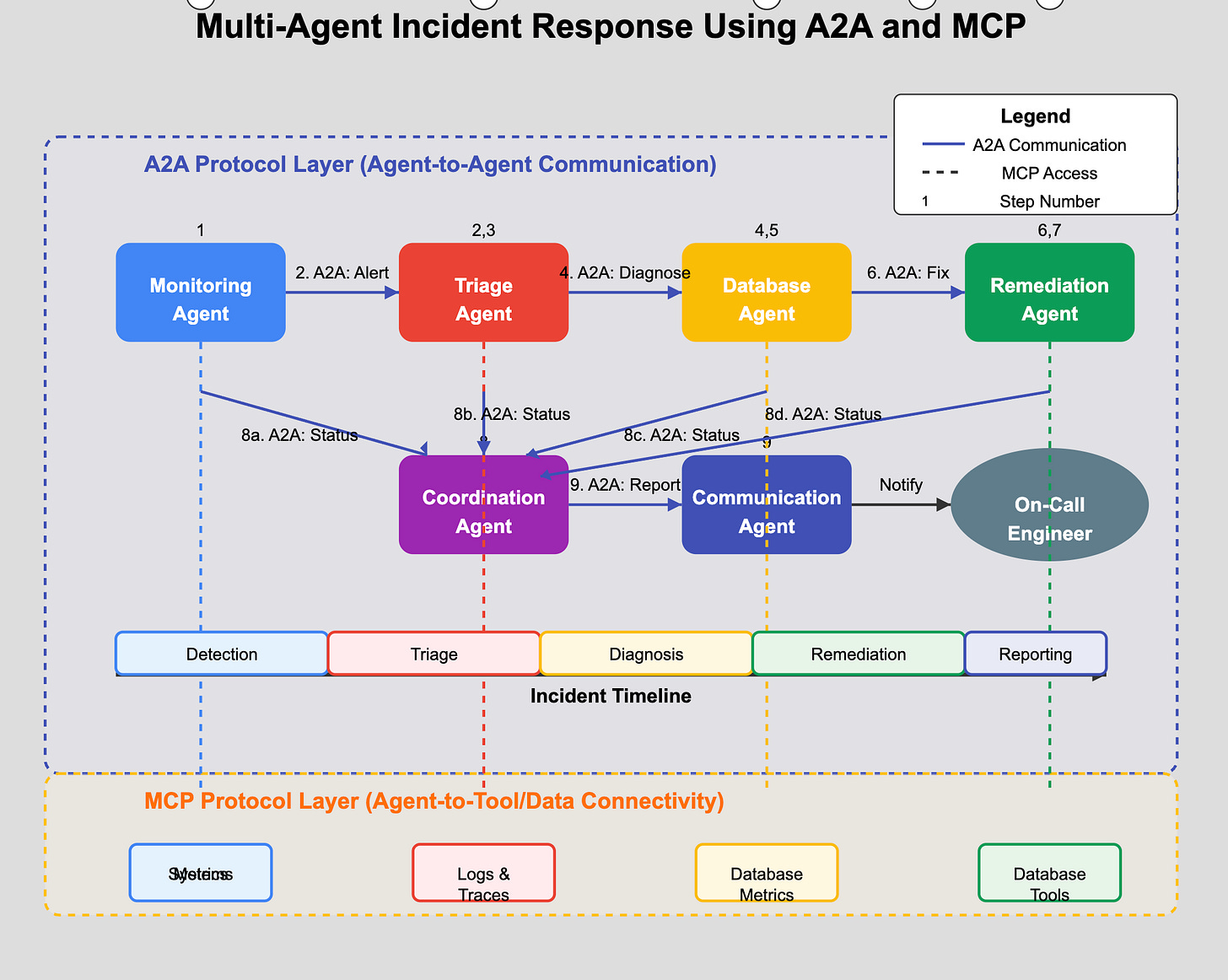

A Real AIOps Scenario: Multi-Agent Incident Response

To make this concrete, let's walk through how a multi-agent system using A2A and MCP might handle a production incident:

Monitoring Agent (using MCP to access metrics systems) detects a latency spike

Triage Agent is invoked via A2A to assess severity and impact

Triage Agent uses MCP to access logs, tracing data, and customer impact metrics

Triage Agent uses A2A to delegate diagnostics to a specialized Database Agent

Database Agent (using MCP to access database metrics) identifies slow queries

Database Agent uses A2A to request optimization from a Remediation Agent

Remediation Agent (using MCP to access database tools) applies index changes

Coordination Agent uses A2A to receive updates from all agents and compile a report

Communication Agent notifies the on-call engineer with a summary of the actions taken

Each agent specializes in a specific aspect of incident response, and A2A enables them to coordinate efficiently. MCP allows each agent to access exactly the tools and data it needs for its specialized role.

Conclusion: Preparing for the Agent-Orchestrated Future

The emergence of A2A and MCP signals a shift from static pipelines to dynamic agent ecosystems. For DevOps teams, this presents an opportunity to elevate automation to a new level of sophistication and autonomy.

These protocols solve different but complementary problems:

A2A creates a common language for agents to collaborate

MCP provides a standard way for agents to access tools and data

Together, they lay the foundation for truly intelligent operations—where agents not only execute predefined tasks but actively coordinate to solve complex problems.

The companies backing these protocols—Google, Anthropic, and their partners—are betting that the future of enterprise AI lies in interoperability rather than isolation. DevOps teams that embrace this vision early will have a significant advantage as these standards mature.

So start mapping out where agent collaboration could benefit your operations, experiment with these protocols, and prepare for a future where you orchestrate not just infrastructure but an intelligent, communicating fleet of AI agents that work together to keep your systems running reliably.

What's your experience with AI agents in DevOps so far? Are you exploring A2A or MCP implementations? Share your thoughts in the comments!