The fusion of DevOps and AI is no longer just a future trend—it's happening now. Docker's Model Runner is bridging this gap with remarkable simplicity, making local AI deployment accessible to all DevOps practitioners.

- Gourav Shah, Founder, School of Devops

The Big Idea: AI Infrastructure Simplified

In today's rapidly evolving tech landscape, the line between DevOps and AI/MLOps is blurring. Docker, a tool already familiar to most DevOps engineers, has quietly released a game-changing feature that acts as a perfect on-ramp for those looking to expand their skillset into AI operations: Docker Model Runner.

This new capability allows DevOps practitioners to pull, run, and manage AI models using the same containerization principles they already understand—no AI expertise required. It's like giving a skilled auto mechanic the keys to a spacecraft with familiar controls.

Why Local Model Deployment Matters

Breaking Free from the Cloud Dependency

Cloud-based AI solutions have dominated the landscape with good reason—they're convenient, scalable, and require minimal setup. However, they come with significant drawbacks:

Data Privacy Concerns: Sensitive data sent to third-party APIs creates compliance nightmares

Unpredictable Costs: Pay-per-token models can lead to budget overruns

Network Dependency: Requiring constant internet connectivity creates operational risks

Vendor Lock-in: Building around specific cloud AI APIs creates long-term dependencies

Latency Issues: Round-trip times to cloud services add meaningful delays for real-time applications

Local model deployment addresses all these concerns while offering DevOps teams something they deeply value: control. Like the difference between relying on a taxi service and having your own vehicle, local AI models give you freedom, predictability, and ownership.

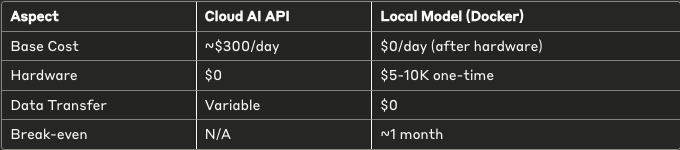

The TCO Advantage

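While cloud AI services appear cost-effective initially, the total cost of ownership (TCO) can favor local deployment for steady, high-volume production workloads. Consider a workload processing 10 million tokens daily: with pay-per-token pricing the bill grows linearly with volume, while the cost of a local deployment is dominated by fixed hardware and power.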
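To run this comparison for your own workload, a back-of-the-envelope model is enough. The JavaScript sketch below is hypothetical: the function names are made up for illustration, and every cost input (price per million tokens, hardware cost, amortization period, power and ops costs) is a number you supply, not a published rate.

```javascript
// Hypothetical TCO model: every price below is an input you supply.

// Cloud cost scales linearly with token volume (pay-per-token pricing).
function cloudMonthlyCost(tokensPerDay, usdPerMillionTokens, daysPerMonth = 30) {
  return (tokensPerDay / 1_000_000) * usdPerMillionTokens * daysPerMonth;
}

// Local cost is mostly fixed: hardware amortized over its useful life,
// plus electricity and any operations time you choose to price in.
function localMonthlyCost({ hardwareUsd, amortizationMonths, powerUsdPerMonth = 0, opsUsdPerMonth = 0 }) {
  return hardwareUsd / amortizationMonths + powerUsdPerMonth + opsUsdPerMonth;
}

// Daily token volume at which the two options cost the same per month.
function breakEvenTokensPerDay(localUsdPerMonth, usdPerMillionTokens, daysPerMonth = 30) {
  return (localUsdPerMonth / (usdPerMillionTokens * daysPerMonth)) * 1_000_000;
}
```

For the 10-million-tokens-a-day case, call `cloudMonthlyCost(10_000_000, yourRate)` with your provider's current pricing and compare it with `localMonthlyCost(...)` for the hardware you would actually buy. The model deliberately leaves out quality differences between a small local model and a large hosted one, which matter as much as cost.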

What Makes Docker Model Runner Unique

Docker Model Runner isn't just another tool—it's a paradigm shift in how DevOps teams can approach AI integration.

The Containerization Advantage

The genius of Docker Model Runner lies in its familiar approach. Rather than creating an entirely new paradigm for AI deployment, it leverages Docker's existing containerization framework that DevOps engineers already know. This means:

Same Workflow: The `docker model` commands follow the same patterns as traditional Docker commands

Consistent Infrastructure: Models are packaged as OCI artifacts and distributed through the same registry infrastructure as container images

Existing DevOps Tooling: Monitoring, logging, and scaling tools work seamlessly

It's like introducing electric vehicles to mechanics who already know how to service cars—the core principles remain the same, even if some components differ.

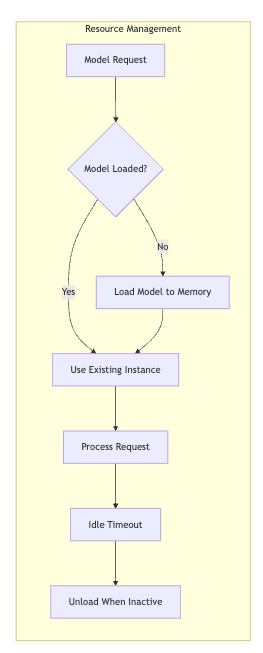

The Secret Sauce: Runtime Resource Management

One of the most elegant aspects of Docker Model Runner is how it handles resource allocation. Unlike traditional approaches that might load models permanently into memory, Docker Model Runner:

Pulls models from Docker Hub the first time they're requested

Stores them locally for future access

Loads them into memory only when needed

Unloads them automatically when idle

This dynamic resource management mirrors how modern cloud-native applications operate, making it instantly familiar to DevOps practitioners. It's similar to how a ride-sharing service only deploys vehicles when needed rather than keeping an entire fleet running constantly.
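To make that lifecycle concrete, here is a conceptual JavaScript sketch of the same pattern: pull on first request, keep a local copy, load on demand, and unload after an idle period. It is not Docker Model Runner's actual implementation; the `ModelManager` class, its `pull`/`load`/`unload` callbacks, and the `idleMs` threshold are hypothetical names chosen for illustration.

```javascript
// Conceptual sketch only; NOT Docker Model Runner's implementation.
// pull/load/unload are hypothetical callbacks supplied by the caller.
class ModelManager {
  constructor({ pull, load, unload, idleMs, now = Date.now }) {
    this.pull = pull;         // download model from a registry
    this.load = load;         // bring model weights into memory
    this.unload = unload;     // free the memory again
    this.idleMs = idleMs;     // idle time before unloading
    this.now = now;           // injectable clock, handy for testing
    this.stored = new Set();  // models present on local disk
    this.loaded = new Map();  // model name -> last-used timestamp
  }

  use(name) {
    if (!this.stored.has(name)) {  // pulled only the first time it is requested
      this.pull(name);
      this.stored.add(name);
    }
    if (!this.loaded.has(name)) {  // loaded into memory only when needed
      this.load(name);
    }
    this.loaded.set(name, this.now());
  }

  // Unloads models idle longer than idleMs but keeps them on disk,
  // so the next use skips the download.
  evictIdle() {
    const t = this.now();
    for (const [name, lastUsed] of this.loaded) {
      if (t - lastUsed > this.idleMs) {
        this.unload(name);
        this.loaded.delete(name);
      }
    }
  }
}
```

In a real runtime, `evictIdle()` would run on a timer. The key design choice is that unloading frees memory but keeps the model on disk, so the next request pays only the load cost, not the download.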

How Docker Model Runner Works

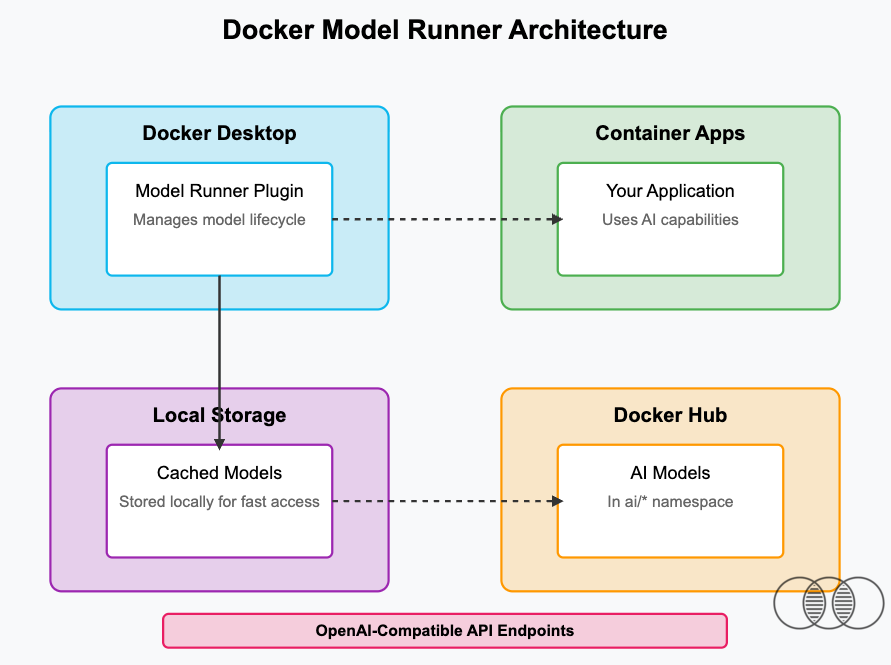

Under the hood, Docker Model Runner operates through a straightforward architecture that will feel intuitive to DevOps engineers.

The Architecture

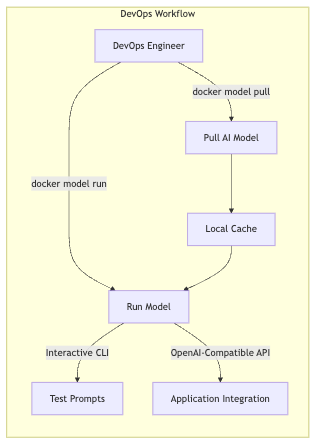

The Workflow

Discovery: Browse available models in Docker Hub's `ai` namespace

Acquisition: `docker model pull ai/model-name` fetches the model

Deployment: `docker model run ai/model-name` starts the model

Interaction: Either through the CLI or API endpoints

Management: List, remove, and update models with familiar Docker commands

This workflow mirrors the traditional Docker container lifecycle that DevOps practitioners already understand. The learning curve is minimal because the mental model remains consistent.
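Because each step is a plain CLI call, the lifecycle is easy to script. The Node.js sketch below walks through it with `child_process.execFileSync`; `lifecycleSteps` and `runSteps` are hypothetical helpers, and the `docker model` subcommands used (`pull`, `run`, `list`, `rm`) reflect the CLI at the time of writing, so confirm them with `docker model --help` on your version.

```javascript
const { execFileSync } = require("node:child_process");

// Hypothetical helper: the lifecycle as a list of docker CLI argument arrays.
function lifecycleSteps(model, prompt) {
  return [
    ["model", "pull", model],         // Acquisition
    ["model", "run", model, prompt],  // Deployment plus a one-shot interaction
    ["model", "list"],                // Management: confirm the model is stored
    ["model", "rm", model],           // Management: clean up
  ];
}

// Hypothetical runner: executes each step, or just prints it in dry-run mode.
function runSteps(steps, { dryRun = false } = {}) {
  const outputs = [];
  for (const args of steps) {
    if (dryRun) {
      // Display only; the string is not shell-quoted.
      outputs.push(["docker", ...args].join(" "));
    } else {
      // execFileSync passes arguments without a shell, so the prompt needs no quoting.
      outputs.push(execFileSync("docker", args, { encoding: "utf8" }));
    }
  }
  return outputs;
}
```

Run it with `{ dryRun: true }` first to see the commands without executing them.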

Docker Model Runner vs. Ollama: The DevOps Perspective

Both Docker Model Runner and Ollama aim to simplify local AI model deployment, but their approaches differ significantly, especially from a DevOps practitioner's viewpoint.

Integration with Existing Infrastructure

Where Ollama functions as a standalone tool requiring its own learning curve and integration strategy, Docker Model Runner leverages the existing Docker infrastructure. For DevOps teams, this distinction is crucial: it means they can largely reuse existing deployment pipelines, monitoring systems, and operational procedures instead of building new ones.

| Feature | Docker Model Runner | Ollama |
|---|---|---|
| Container Integration | Native | Requires additional setup |
| Docker Compose Support | Built-in | Limited |
| Existing CI/CD Compatibility | High | Moderate |
| Infrastructure as Code Support | Complete | Partial |
| Learning Curve for DevOps | Minimal | Moderate |

Interactive CLI: DevOps-Friendly Approach

One of Docker Model Runner's standout features is its interactive CLI, which provides:

Interactive chat mode directly in the terminal

One-time prompt execution for quick testing

Seamless integration with shell scripts and automation

Familiar Docker-style command patterns

This means DevOps engineers can quickly validate models, test prompts, and incorporate AI capabilities into their automation workflows without writing a single line of code.

```shell
# Quick one-time prompt with Docker Model Runner
docker model run ai/smollm2 "Explain Kubernetes CRDs in a tweet-length response"

# Interactive mode for exploration
docker model run ai/smollm2
> What are the security implications of running containers as root?
> How can I ensure my Docker images are minimally sized?
> /bye
```
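For automation, the one-time prompt form is the useful one: it prints a single answer and exits. A minimal Node.js sketch of wrapping it for a CI job or script might look like this; `askModel` is a hypothetical helper, and the two-minute timeout is an arbitrary default of this sketch, not a Docker setting.

```javascript
const { execFileSync } = require("node:child_process");

// Hypothetical helper: run a one-shot prompt against a local model and
// return the trimmed answer. `exec` is injectable so it can be tested offline.
function askModel(prompt, { model = "ai/smollm2", timeoutMs = 120_000, exec = execFileSync } = {}) {
  const out = exec("docker", ["model", "run", model, prompt], {
    encoding: "utf8",
    timeout: timeoutMs, // fail the job instead of hanging if the model stalls
  });
  return out.trim();
}
```

Calling `askModel("Explain Kubernetes CRDs in a tweet-length response")` from a script returns the same text the CLI would print.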

The OpenAI API Compatibility Game-Changer

Perhaps the most significant advantage of Docker Model Runner is its compatibility with the OpenAI API format. This seemingly simple feature has profound implications for DevOps practitioners transitioning to AI/MLOps.

The API Bridge

By implementing OpenAI-compatible endpoints, Docker Model Runner allows DevOps teams to:

Swap Dependencies: Replace OpenAI API calls with local model endpoints by changing little more than the base URL and model name

Test Locally, Deploy Globally: Develop against local models before moving to production

Create Hybrid Architectures: Route sensitive queries to local models while using cloud APIs for general purposes

Future-Proof Applications: Switch between different AI backends as technology evolves

This compatibility is like having a universal adapter for AI—it ensures that investments in current AI integration aren't wasted when moving between providers or deployment strategies.
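The hybrid idea can be sketched as a small router. In this hypothetical JavaScript example, requests that look sensitive (or are explicitly flagged) go to the local Docker Model Runner endpoint, and everything else goes to a cloud API. The pattern list, the `BACKENDS` table, and the model choices are assumptions for illustration; a real data-classification policy would be far stricter than a few regular expressions.

```javascript
// Hypothetical backend table; URLs and model names are illustrative.
const BACKENDS = {
  local: {
    baseUrl: "http://model-runner.docker.internal/engines/v1",
    model: "ai/smollm2",
    apiKey: null, // the local endpoint needs no key
  },
  cloud: {
    baseUrl: "https://api.openai.com/v1",
    model: "gpt-3.5-turbo",
    apiKey: process.env.OPENAI_API_KEY ?? null,
  },
};

// Naive sensitivity check (SSN-like numbers, credential keywords).
const SENSITIVE = [/\b\d{3}-\d{2}-\d{4}\b/, /password/i, /api[_-]?key/i];

// Routes sensitive text to the local model, everything else to the cloud.
function pickBackend(text, { forceLocal = false } = {}) {
  const sensitive = forceLocal || SENSITIVE.some((re) => re.test(text));
  return sensitive ? BACKENDS.local : BACKENDS.cloud;
}
```

Because both backends speak the same API, the caller only needs the returned `baseUrl`, `model`, and `apiKey` to build its request.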

The Code Remains the Same

For DevOps practitioners managing applications that use AI capabilities, this compatibility means minimal disruption when shifting between deployment strategies:

```javascript
// Before: OpenAI Cloud API
// (uses top-level await: run as an ES module or inside an async function)
const response = await fetch('https://api.openai.com/v1/chat/completions', {
  method: 'POST',
  headers: {
    'Content-Type': 'application/json',
    'Authorization': `Bearer ${process.env.OPENAI_API_KEY}`
  },
  body: JSON.stringify({
    model: 'gpt-3.5-turbo',
    messages: [{ role: 'user', content: 'Hello world' }]
  })
});
```

```javascript
// After: Docker Model Runner (local deployment)
// This hostname is intended for code running inside containers.
const response = await fetch('http://model-runner.docker.internal/engines/v1/chat/completions', {
  method: 'POST',
  headers: {
    // No Authorization header: the local endpoint needs no API key
    'Content-Type': 'application/json'
  },
  body: JSON.stringify({
    model: 'ai/smollm2',
    messages: [{ role: 'user', content: 'Hello world' }]
  })
});
```

The minimal change required to switch between cloud and local deployment creates enormous flexibility for DevOps teams, allowing them to optimize for cost, performance, or privacy as needed.
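Since the two requests differ only in base URL, model name, and API key, those can move into configuration. A minimal sketch, assuming hypothetical environment variables `LLM_BASE_URL`, `LLM_MODEL`, and `LLM_API_KEY` and a hypothetical `chat()` helper, with defaults pointing at the local endpoint:

```javascript
// Backend settings come from hypothetical environment variables; the
// defaults point at the local Docker Model Runner endpoint.
const backend = {
  baseUrl: process.env.LLM_BASE_URL ?? "http://model-runner.docker.internal/engines/v1",
  model: process.env.LLM_MODEL ?? "ai/smollm2",
  apiKey: process.env.LLM_API_KEY, // leave unset for the local endpoint
};

// Builds an OpenAI-style chat request; only the Authorization header is
// conditional, because the local endpoint needs no key.
function buildChatRequest({ baseUrl, model, apiKey }, messages) {
  const headers = { "Content-Type": "application/json" };
  if (apiKey) headers.Authorization = `Bearer ${apiKey}`;
  return {
    url: `${baseUrl.replace(/\/+$/, "")}/chat/completions`, // tolerate a trailing slash
    init: { method: "POST", headers, body: JSON.stringify({ model, messages }) },
  };
}

// Sends the request and returns the first choice's text.
async function chat(config, messages, fetchFn = fetch) {
  const { url, init } = buildChatRequest(config, messages);
  const res = await fetchFn(url, init);
  if (!res.ok) throw new Error(`HTTP ${res.status} from ${url}`);
  const data = await res.json();
  return data.choices[0].message.content;
}
```

With this shape, `chat(backend, [{ role: "user", content: "Hello world" }])` talks to the local model, and moving to the cloud is a deployment-time change (set `LLM_BASE_URL` and `LLM_API_KEY`) rather than a code change. The `fetchFn` parameter exists only so the function can be tested without a network.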

Why DevOps Practitioners Should Care: The MLOps Evolution

For DevOps professionals eyeing the growing AI/MLOps field, Docker Model Runner represents a perfect entry point. Here's why it matters:

The Skill Bridge

The transition from DevOps to MLOps doesn't happen overnight. Docker Model Runner creates a natural progression path:

Start with Familiar Tools: Use Docker commands you already know

Explore AI Capabilities: Interact with models through the CLI

Learn Model Deployment Patterns: Understand how AI models are packaged and distributed

Integrate with Applications: Connect existing applications to local AI endpoints

Build MLOps Pipelines: Gradually add model training, evaluation, and versioning

Each step builds on existing DevOps knowledge while introducing AI concepts organically. It's comparable to how Kubernetes built on Docker knowledge—a natural evolution rather than a complete paradigm shift.

Real-World Applications

The practical applications for DevOps teams are immediate and valuable:

Log Analysis: Deploy local models to analyze system logs and detect anomalies

Infrastructure as Code Generation: Use AI to generate or review Terraform/CloudFormation templates

Documentation Automation: Generate documentation from code or configuration files

Incident Response: Summarize alerts and suggest remediation steps

Cost Optimization: Analyze resource usage patterns and recommend optimizations

These use cases directly enhance existing DevOps responsibilities while building valuable MLOps skills.
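As an illustration of the log-analysis case, here is a hypothetical helper that sends the tail of a log to a local model through the OpenAI-compatible endpoint and returns a summary. The endpoint URL is the in-container one used in the earlier `fetch` example; the model name, prompt wording, and 200-line cutoff are assumptions to adjust for your setup.

```javascript
// Hypothetical log-triage helper; URL, model, and prompt are assumptions.
const ENDPOINT = "http://model-runner.docker.internal/engines/v1/chat/completions";

// Keeps only the most recent non-empty lines so the prompt fits a small model's context.
function buildLogPrompt(logText, maxLines = 200) {
  const tail = logText.split("\n").filter(Boolean).slice(-maxLines).join("\n");
  return [
    { role: "system", content: "You are an SRE assistant. Be concise." },
    { role: "user", content: `Summarize errors and likely causes in these logs:\n${tail}` },
  ];
}

// Sends the prompt to the local model and returns its summary text.
async function summarizeLogs(logText, { model = "ai/smollm2", fetchFn = fetch } = {}) {
  const res = await fetchFn(ENDPOINT, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model, messages: buildLogPrompt(logText) }),
  });
  if (!res.ok) throw new Error(`Model Runner returned HTTP ${res.status}`);
  const data = await res.json();
  return data.choices[0].message.content;
}
```

The same shape works for incident response: change the prompt to ask for remediation steps for an alert payload. Treat the output as a suggestion for a human to review, not an action to execute automatically.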

Getting Started: A DevOps Guide

For DevOps practitioners looking to explore Docker Model Runner, here's a simplified getting started guide:

Ensure Docker Desktop is Updated: Docker Model Runner ships with recent Docker Desktop releases; if the `docker model` command is not recognized, check that the feature is enabled in Docker Desktop's settings

Verify Installation: Run `docker model status` to confirm everything is working

Pull Your First Model: Try `docker model pull ai/smollm2` for a lightweight starter model

Interactive Exploration: Run `docker model run ai/smollm2` to start chatting with the model

API Integration: Set up a simple application using the OpenAI-compatible endpoints
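Before wiring up a full application, a quick smoke test helps. This Node.js sketch assumes the OpenAI-style `GET /models` route is available under the same `/engines/v1` base path used for chat completions, returning `{ data: [{ id: ... }] }`; `MODEL_RUNNER_URL`, `modelIds`, and `checkModelAvailable` are hypothetical names. The default URL is meant for code inside containers; from the host you may need to enable TCP access in Docker Desktop and point `MODEL_RUNNER_URL` at a localhost address instead.

```javascript
// Hypothetical smoke test; assumes an OpenAI-style GET /models route.
const BASE_URL = process.env.MODEL_RUNNER_URL ?? "http://model-runner.docker.internal/engines/v1";

// Extracts model ids from an OpenAI-style list response.
function modelIds(listResponse) {
  return (listResponse.data ?? []).map((m) => m.id);
}

// Resolves to true if the given model appears in the list.
async function checkModelAvailable(model, fetchFn = fetch) {
  const res = await fetchFn(`${BASE_URL}/models`);
  if (!res.ok) throw new Error(`Model Runner API returned HTTP ${res.status}`);
  const ids = modelIds(await res.json());
  // Ids may carry a tag (e.g. ":latest"), so also match on the repository part.
  return ids.some((id) => id === model || id.startsWith(`${model}:`));
}
```

If `checkModelAvailable("ai/smollm2")` resolves to `false`, rerun the pull step; if it rejects, the API is not reachable from where the script runs.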

Key Takeaways

Docker Model Runner represents a pivotal moment for DevOps practitioners looking to expand into AI/MLOps:

Familiar Territory: Leverages existing Docker knowledge for AI model deployment

Operational Control: Enables local AI deployment with the same reliability guarantees as other containerized services

Simplified Experimentation: Interactive CLI makes exploring AI capabilities accessible without coding

API Compatibility: OpenAI-compatible endpoints create flexible deployment options

Career Evolution: Provides a natural bridge from traditional DevOps to AI/MLOps roles

The containerization revolution transformed how we deploy traditional applications. Docker Model Runner is poised to do the same for AI, with DevOps practitioners perfectly positioned to lead this transformation.

As the lines between software development, operations, and AI continue to blur, tools like Docker Model Runner that bridge these domains will become increasingly valuable. For the DevOps practitioner looking toward the future, there's no better time to start exploring this powerful integration of familiar containerization technology with the exciting world of AI models.

About the Author: Gourav Shah is a DevOps consultant and corporate trainer specializing in the intersection of containerization, cloud-native architecture, and AI operations. With over 18 years of experience helping organizations modernize their infrastructure, Gourav is now on a mission to help DevOps practitioners prepare for the emerging field of AI/MLOps.
