Goodbye DynamoDB: Terraform Introduces Native S3 State Locking

Simplify Your Infrastructure Management with Terraform's Built-in S3 Locking Mechanism

Hey there,

It's Gourav here. You know how sometimes the most significant changes happen with the least fanfare? Last week, I was updating some infrastructure code for a client when I noticed something that made me do a double-take. After years of setting up the familiar DynamoDB table alongside S3 buckets for state locking, I discovered that Terraform now supports native state locking with S3 backends — no DynamoDB required.

I immediately thought of you and all the other DevOps practitioners who've been jumping through these same hoops for years. This change is like discovering your favorite restaurant now delivers – something you've wanted forever suddenly becomes available, and it fundamentally changes your experience.

Let's dive into what this means for your workflows, your infrastructure, and your sanity.

The Old Way: The Terraform State Management Dance

If you've been in the DevOps trenches for any length of time, you're intimately familiar with this setup:

    terraform {
      backend "s3" {
        bucket         = "my-terraform-states"
        key            = "prod/network/terraform.tfstate"
        region         = "us-west-2"
        dynamodb_table = "terraform-locks"
        encrypt        = true
      }
    }

Setting up that DynamoDB table was like having to bring your own chairs to a restaurant. It worked, but it added extra steps that felt unnecessary. It was that last 20% of work that somehow took 80% of the effort, especially when you had to explain to new team members why we needed this additional component.

For those managing multiple environments or working with numerous teams, this meant:

Creating and maintaining additional infrastructure

Writing extra documentation

Troubleshooting random locking issues

Managing another AWS service with its own costs and IAM permissions

Just like how you need to set up CI/CD pipelines before actually deploying any real code, this felt like infrastructure you needed before you could create your actual infrastructure. Meta-infrastructure, if you will.
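For anyone who has not had to build it by hand, the meta-infrastructure in question looked roughly like the sketch below. The table name matches the dynamodb_table value in the backend block above; the resource label and the on-demand billing mode are my own assumptions, so adjust them to your setup.

```hcl
# Lock table that the S3 backend relied on before native locking.
# "terraform_locks" (resource label) and PAY_PER_REQUEST are illustrative choices.
resource "aws_dynamodb_table" "terraform_locks" {
  name         = "terraform-locks"
  billing_mode = "PAY_PER_REQUEST"

  # The S3 backend expects a string partition key with exactly this name.
  hash_key = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

Note the chicken-and-egg problem: this table has to exist before the backend that uses it can be initialized, so it usually lived in a separate bootstrap configuration.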

The New Way: Native S3 State Locking

Starting with Terraform 1.10, the configuration looks refreshingly simple:

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          # Governs the resources you manage, not backend locking
          version = ">= 5.80.0"
        }
      }

      backend "s3" {
        bucket  = "company-terraform-states"
        key     = "environments/production/networking/terraform.tfstate"
        region  = "eu-west-1"
        encrypt = true

        # Enables native S3 state locking
        use_lockfile = true

        # Optional additional settings
        workspace_key_prefix = "workspaces"
        sse_customer_key     = null # null leaves SSE-C unset
      }

      # use_lockfile first appeared in Terraform 1.10
      required_version = ">= 1.10.0"
    }

    provider "aws" {
      region = "eu-west-1"

      default_tags {
        tags = {
          Environment = "Production"
          ManagedBy   = "Terraform"
          Team        = "Infrastructure"
        }
      }
    }

With this configuration, the essential use_lockfile = true parameter enables Terraform's native state locking capability with S3. I've included some additional optional settings to demonstrate the flexibility you have with this approach. The one constraint that matters for locking is Terraform 1.10.0 or higher, the first release to ship use_lockfile (as an experimental option; it became generally available in 1.11). The AWS provider constraint is unrelated to locking: the S3 backend is part of Terraform itself, so the provider version only affects the resources you manage.

It's like switching from manual deployment to automated CI/CD. The result is the same, but you've eliminated multiple points of failure and simplified your entire workflow.

How It Works: Under the Hood

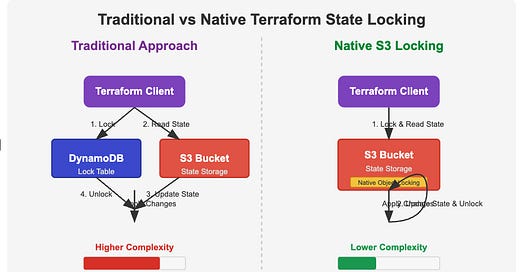

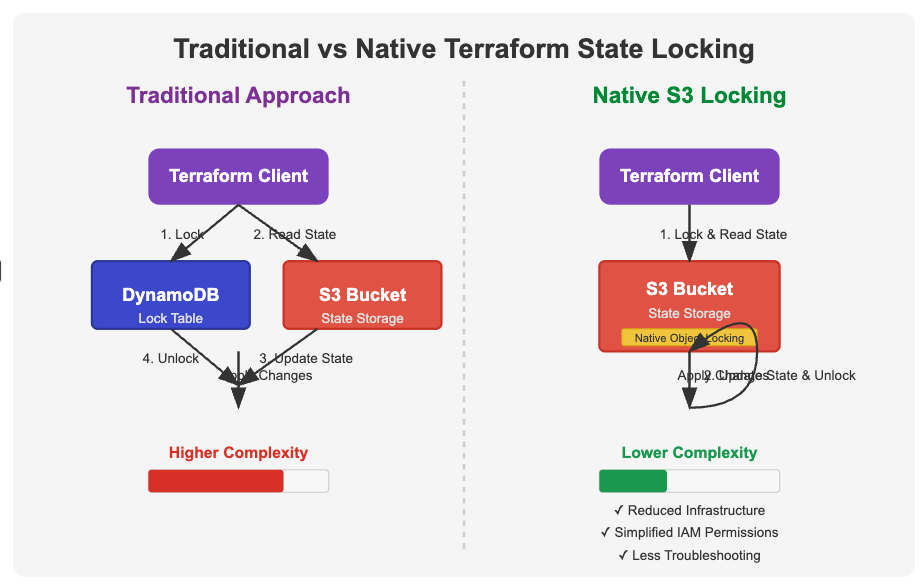

Let me explain what's happening behind the scenes with a diagram:

In the traditional approach, when you run terraform apply, the following happens:

Terraform tries to acquire a lock in the DynamoDB table

If successful, it then reads the state from S3

After applying changes, it updates the state in S3

Finally, it releases the lock in DynamoDB

If anyone else tries to run Terraform against the same state during this process, they'll see an error about the state being locked.

With native S3 locking, the process is streamlined:

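Terraform writes a small lock file next to the state object, using the state key plus a .tflock suffix

It creates that file with an S3 conditional write: a PutObject that only succeeds if the object does not already exist

This creates an atomic "check-and-set" pattern directly in S3, so the first writer gets the lock and everyone else sees the state as locked

When the run finishes, Terraform deletes the lock file

No separate DynamoDB service is involved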

This approach is similar to how Git handles merge conflicts - it's all built into the same system that stores your data, rather than requiring an external service to coordinate.

Benefits for DevOps Teams

For you as a DevOps practitioner, this change offers several immediate benefits:

1. Simplified Infrastructure

Remember setting up a Kubernetes cluster and then realizing you needed to set up a whole monitoring stack before it was production-ready? That's how it felt adding DynamoDB to your Terraform setup. Now, it's one less component to worry about - like having monitoring built into your K8s cluster.

2. Cost Reduction

Every AWS service comes with its own pricing model. While DynamoDB isn't typically expensive for state locking (it's mostly within the free tier for small to medium teams), it's still a line item that you can now eliminate. It's like finding out your favorite SaaS tool now includes a feature you were paying extra for.

3. Reduced IAM Complexity

Your Terraform service accounts or users no longer need DynamoDB permissions. That's one less policy to manage, one less potential security issue to worry about. If your organization practices least-privilege access (as it should), this is a meaningful simplification.
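What remains is an S3-only policy. Here is a minimal sketch of what that might look like for the backend shown earlier, assuming the default workspace (with workspace_key_prefix in play, the object paths differ) and a hypothetical data source label of terraform_state. The key detail is that the role needs to create and delete the .tflock object that sits beside the state file.

```hcl
# Sketch of an S3-only policy for native locking; the label and statement IDs are illustrative.
data "aws_iam_policy_document" "terraform_state" {
  # Terraform lists the bucket to discover state objects and workspaces.
  statement {
    sid       = "ListStateBucket"
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::company-terraform-states"]
  }

  # Read and write the state object itself.
  statement {
    sid       = "ReadWriteState"
    actions   = ["s3:GetObject", "s3:PutObject"]
    resources = ["arn:aws:s3:::company-terraform-states/environments/production/networking/terraform.tfstate"]
  }

  # Create, read, and remove the lock file next to the state object.
  statement {
    sid       = "ManageLockFile"
    actions   = ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"]
    resources = ["arn:aws:s3:::company-terraform-states/environments/production/networking/terraform.tfstate.tflock"]
  }
}
```

If your existing role never had s3:DeleteObject on the state prefix, that is the one permission you are likely to need to add when switching over.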

4. Less Troubleshooting

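How many times have you had to debug a "state is locked" issue only to find that the DynamoDB permissions were misconfigured? Or dealt with a stuck lock that required manual intervention? Native S3 locking removes the DynamoDB side of these failures. A crashed run can still leave a stale lock file behind, but clearing it is the same terraform force-unlock you already know.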

Migration: Moving to Native Locking

If you're excited to simplify your Terraform workflows (and who wouldn't be?), here's how to migrate:

Plan your migration - Choose a time when active development is minimal

Update your backend configuration - Add use_lockfile = true and remove the dynamodb_table attribute

Run terraform init -reconfigure - Terraform detects the changed backend block and needs to be re-initialized; the state itself stays where it is

Verify locking works - Try running concurrent operations to ensure locking functions
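If you would rather not flip everything in one step, the S3 backend accepts both locking mechanisms at the same time, which gives you a transition period where teammates on older setups are still protected. A sketch of that intermediate state, reusing the bucket and table names from the old configuration earlier in this post:

```hcl
terraform {
  backend "s3" {
    bucket  = "my-terraform-states"
    key     = "prod/network/terraform.tfstate"
    region  = "us-west-2"
    encrypt = true

    # Transitional: both locks are acquired while the two settings coexist.
    dynamodb_table = "terraform-locks" # remove in a follow-up change
    use_lockfile   = true
  }
}
```

Once everyone is on a Terraform version that supports use_lockfile, drop the dynamodb_table line, re-initialize, and only then delete the table. Newer Terraform releases may print a deprecation warning for dynamodb_table in the meantime.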

Remember to update your documentation and inform your team about this change. Old habits die hard, and you'll likely find team members still creating DynamoDB tables out of muscle memory.

A word of caution: If you're using multiple tools that interact with your Terraform state (like Terragrunt or custom scripts), verify they support native S3 locking before migrating.

Real-world Implications

Let me share a quick story from my own experience. Last month, I was helping a client migrate their infrastructure to a new AWS account. Due to a misconfiguration, the Terraform service account had read access to DynamoDB but not write access. This resulted in a frustrating situation where Terraform could read the state but couldn't acquire locks.

The team spent nearly two hours debugging permission issues before identifying the problem. With native S3 locking, there is no DynamoDB policy to get wrong: the role only needs S3 permissions on the state object and its lock file.

Another client with multiple teams working on shared infrastructure frequently ran into race conditions where one team would override another's changes. Adding DynamoDB locking helped, but it was complex to set up correctly across all their environments. Native S3 locking would have saved them days of integration work.

Key Takeaways for Your DevOps Journey

As we wrap up, here are the essential points to remember:

Simplify your infrastructure - Remove DynamoDB tables from your Terraform setup when possible

Update your templates - If you have starter templates or modules that include DynamoDB locking, update them

Review IAM policies - Remove unnecessary DynamoDB permissions from your Terraform roles

Document the change - Make sure your team knows about this simplification

This change from Terraform represents something I've always advocated for: eliminating complexity when it doesn't add value. Just like how we moved from managing individual servers to infrastructure as code, each simplification in our toolchain helps us focus more on delivering business value and less on maintaining meta-infrastructure.

What other DevOps tools or practices do you think need simplification? Drop a comment below - I'd love to continue this conversation and potentially tackle those topics in future newsletters.

Until next time,

Gourav

P.S. If you found this useful, hit the "Like" button and share with a colleague who's still setting up DynamoDB tables for their Terraform states. And if you're implementing this change, I'd love to hear how it goes - reply/comment with your experience!