The Dawn of Agentic DevOps: Understanding Model Context Protocol (MCP)

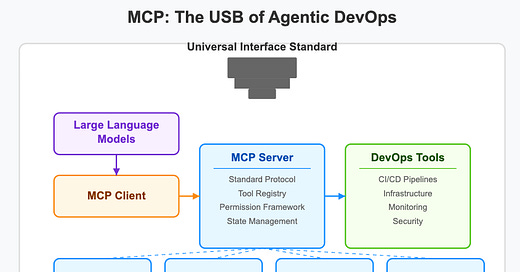

Introduction: The Universal Serial Bus of AI

Remember the days before USB? A chaotic landscape of computer peripherals with proprietary connectors—parallel ports, serial ports, PS/2 connectors, and custom interfaces—each requiring specific drivers and configurations. Connecting a new device was often a frustrating experience of compatibility issues, driver installations, and sometimes even hardware conflicts.

Then, in 1996, the Universal Serial Bus (USB) specification arrived. Its promise was revolutionary: a single standardized interface that would allow any compatible device to connect to any computer, with plug-and-play functionality that "just worked." Today, we barely think about connectivity—we expect our keyboards, mice, cameras, storage devices, and countless other peripherals to function seamlessly when plugged in.

The DevOps world in 2025 is experiencing a similar revolutionary moment with the emergence of Model Context Protocol (MCP). Just as USB standardized hardware connections, MCP is standardizing how AI agents interact with DevOps tools and environments. This standardization promises to usher in a new era of "Agentic DevOps," where AI-powered agents can seamlessly interact with any tool in your technology stack.

For DevOps professionals seeking to remain relevant and thrive in this AI-dominated future, understanding MCP isn't just advantageous—it's essential. This essay explores how MCP works and how it will transform the DevOps landscape, providing a roadmap for practitioners to evolve their skills and embrace the agentic future.

The Problem: Tool Fragmentation in DevOps and AI

The Pre-MCP DevOps Landscape

Modern DevOps environments are characterized by an explosion of specialized tools:

Container orchestration platforms like Kubernetes

CI/CD pipelines across GitHub Actions, Jenkins, and GitLab

Infrastructure as Code tools like Terraform and CloudFormation

Monitoring solutions from Prometheus to Datadog

Cloud provider-specific services across AWS, Azure, and GCP

Each tool has its own API, authentication methods, configuration formats, and operational models. DevOps engineers spend years mastering these interfaces, writing custom integrations, and building automation scripts to tie these disparate systems together.

The AI Integration Challenge

As Large Language Models (LLMs) have grown more capable, they've demonstrated remarkable potential for automating DevOps tasks—from writing infrastructure code to debugging applications and optimizing configurations. However, each AI-to-tool integration has required custom development:

Specialized prompts to guide the AI in using each tool

Custom code to translate AI outputs into tool-specific formats

Bespoke permission and safety mechanisms for each integration

Isolated memory and state management for each workflow

This fragmentation has limited AI adoption in DevOps to isolated use cases rather than enabling comprehensive, agentic automation. The situation mirrors the pre-USB era, with each integration requiring specialized knowledge and custom development.

Understanding Model Context Protocol (MCP)

What is MCP?

Model Context Protocol (MCP) is an open standard, introduced by Anthropic in November 2024, that defines how AI applications exchange context with the tools and data sources around them. Instead of a bespoke API integration for every tool, MCP carries all interactions in a common JSON-RPC 2.0 message format. The connection is bidirectional: clients discover and invoke what servers offer, and servers report results and state changes back. This enables AI agents to:

Gain awareness of available tools, resources, and constraints

Receive real-time feedback about system states and operation outcomes

Maintain contextual memory across complex, multi-step operations

Navigate action spaces with appropriate permissions and safeguards

At its core, MCP addresses a fundamental challenge: how to let AI systems operate effectively in complex technical environments without hard-coding every possible interaction path or requiring constant human intervention. An MCP client is not wired to specific tools in advance. It asks each server what it offers at runtime and invokes those capabilities through the same message format.
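To make runtime discovery concrete, here is a minimal sketch of the JSON-RPC 2.0 messages an MCP client sends during a session, built with Python's standard library. It assumes the method names (`initialize`, `tools/list`, `tools/call`) and the `2024-11-05` protocol revision of the published MCP specification; check the current revision before relying on them. The client name and the `get_pod_status` tool are hypothetical, and the transport (stdio or HTTP) is omitted.

```python
import json
from itertools import count
from typing import Optional

# Each JSON-RPC request carries an id so its response can be matched to it.
_next_id = count(1)


def request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, the envelope MCP uses for every call."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# 1. Handshake: announce the protocol revision and client capabilities.
#    (A real client then sends a "notifications/initialized" notification.)
init = request("initialize", {
    "protocolVersion": "2024-11-05",
    "capabilities": {},
    "clientInfo": {"name": "devops-agent", "version": "0.1.0"},  # hypothetical
})

# 2. Discovery: ask the server which tools it offers instead of hard-coding them.
discover = request("tools/list")

# 3. Invocation: call a tool by a name the server advertised.
call = request("tools/call", {
    "name": "get_pod_status",  # hypothetical tool name
    "arguments": {"namespace": "payments", "pod": "api-7d9f"},
})

for msg in (init, discover, call):
    print(json.dumps(msg))
```

The agent only needs to know steps 1 to 3. Which tools exist, and what arguments they take, comes back from the server in the `tools/list` response.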

The Technical Architecture of MCP

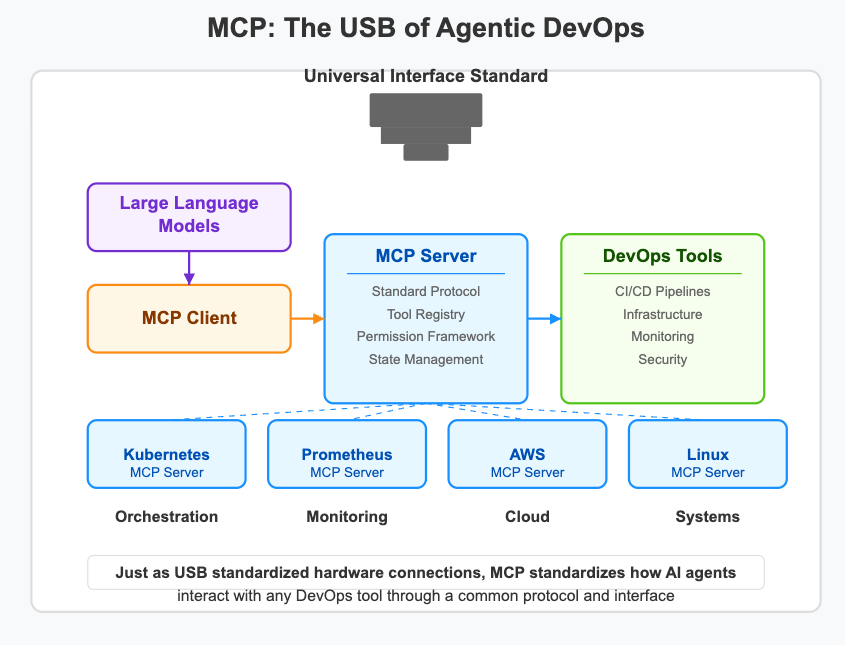

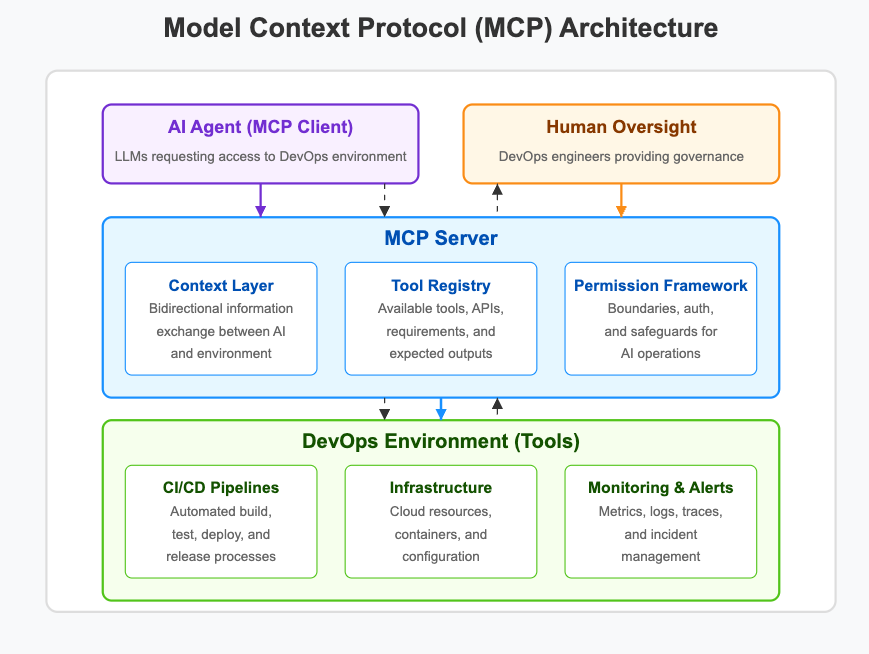

MCP operates through a layered architecture with clear separation between clients, servers, and tools:

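AI Agents (MCP Hosts and Clients): AI applications, such as Claude Desktop or an AI-enabled IDE, that embed an MCP client so an LLM like Claude, GPT-4, or Gemini can request access to environments and tools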

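MCP Servers: Lightweight programs that implement the protocol and expose a system's capabilities to clients as tools, resources, and prompts. Conceptually, a DevOps-oriented server handles: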

Context Layer: Manages the bidirectional flow of information between the AI model and execution environment

Tool Registry: Documents available tools, their capabilities, input requirements, and expected outputs

State Manager: Tracks the current state of systems and resources being manipulated

Permission Framework: Enforces boundaries on what actions the AI can take

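Memory System: Maintains operational history and learned patterns across sessions (not part of the core protocol; usually provided by the host application or a dedicated memory server)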

DevOps Tools: The actual infrastructure, pipelines, and monitoring systems that the AI agents interact with

This architecture allows AI agents to operate with both autonomy and safety in complex DevOps environments, whether performing routine maintenance tasks or responding to critical incidents.
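The tool-registry and dispatch part of this architecture fits in a few lines of standard-library Python. This is a hypothetical, in-process illustration and does not use an official MCP SDK. `TOOLS`, `register`, `handle`, and the `get_replicas` tool with its fake data are invented for the example. Transport, permissions, and state tracking are left out. The result shapes (`tools`, `inputSchema`, `content`, `isError`) are assumed to follow the MCP specification's `tools/list` and `tools/call` responses.

```python
import json
from typing import Callable

# Hypothetical in-memory tool registry: name -> (metadata, handler).
TOOLS: dict = {}


def register(name: str, description: str, input_schema: dict):
    """Decorator recording a handler with the metadata clients will discover."""
    def wrap(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = ({"name": name, "description": description,
                        "inputSchema": input_schema}, fn)
        return fn
    return wrap


@register("get_replicas", "Return the replica count of a deployment",
          {"type": "object",
           "properties": {"deployment": {"type": "string"}},
           "required": ["deployment"]})
def get_replicas(deployment: str) -> str:
    # Stand-in for a real Kubernetes API call; this data is fake.
    return str({"web": 3, "worker": 5}.get(deployment, 0))


def _error(rid, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": rid,
            "error": {"code": code, "message": message}}


def handle(raw: str) -> dict:
    """Dispatch one JSON-RPC request against the registry."""
    req = json.loads(raw)
    rid, method = req.get("id"), req.get("method")
    params = req.get("params", {})
    if method == "tools/list":
        tools = [meta for meta, _ in TOOLS.values()]
        return {"jsonrpc": "2.0", "id": rid, "result": {"tools": tools}}
    if method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            # The MCP spec's examples report unknown tools as -32602 (Invalid params).
            return _error(rid, -32602, f"Unknown tool: {params.get('name')}")
        _, handler = entry
        text = handler(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
        return {"jsonrpc": "2.0", "id": rid, "result": result}
    # -32601 is the JSON-RPC 2.0 "Method not found" code.
    return _error(rid, -32601, f"Method not found: {method}")


print(handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
```

In practice an official SDK (Python or TypeScript) would handle the transport and the initialization handshake. The registry-plus-dispatch shape stays the same.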

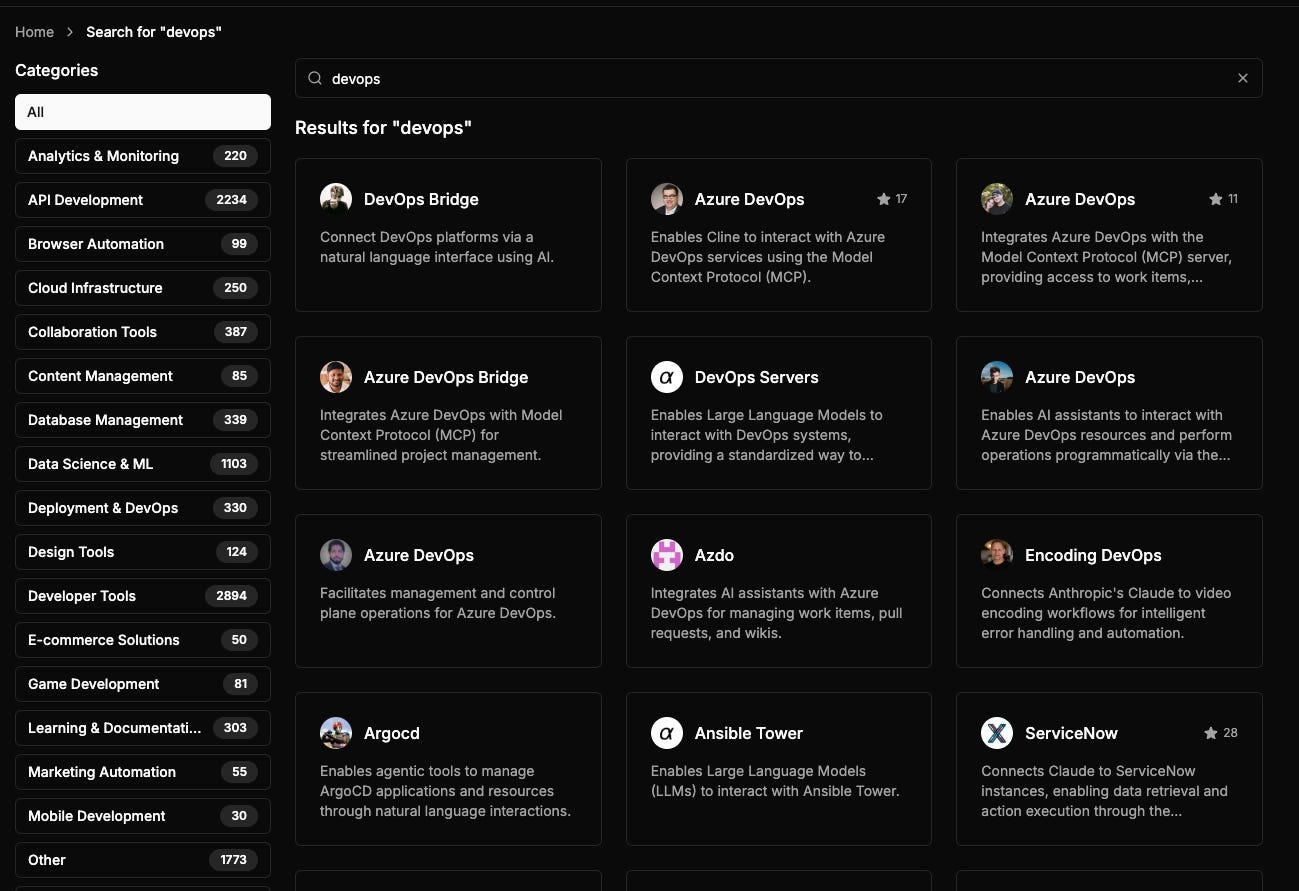

The Emerging DevOps MCP Ecosystem

A DevOps-focused ecosystem is forming around the MCP standard, with vendors and open-source contributors building server implementations for the core parts of the stack:

Kubernetes MCP Servers: Enabling AI agents to orchestrate containerized applications, manage deployments, scale resources, and handle configuration management through a standardized interface

Prometheus MCP Servers: Allowing AI agents to interact with monitoring systems, set up alerts, analyze metrics, and proactively identify performance issues

Example: https://github.com/kaznak/alertmanager-mcp

Cloud Provider MCP Servers: AWS, Azure, and GCP implementations that provide standardized access to cloud resources, serverless functions, and managed services

Linux MCP Servers: System-level implementations that enable AI agents to manage operating system tasks, user accounts, configuration files, and system services

CI/CD MCP Servers: Specialized servers for Jenkins, GitHub Actions, GitLab CI, and other pipeline tools, allowing AI agents to design and optimize delivery workflows

A curated list of MCP servers for DevOps is available at https://github.com/agenticdevops/awesome-devops-mcp

This diverse ecosystem lets DevOps teams adopt MCP across their entire technology stack while keeping interaction patterns consistent for AI agents. Just as USB allowed any device to connect to any computer through a standardized interface, MCP lets any MCP-compatible AI application work with any DevOps tool that has an MCP server, regardless of which LLM or tool implementation sits on either side.
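Consistent interaction patterns look like this in practice. Every server describes its tools with the same metadata shape, and each server translates calls into whatever sits behind it. The sketch below is hypothetical: the tool names and translation helpers are not taken from any of the servers linked above. It relies only on the `kubectl scale` command and Prometheus's `/api/v1/query` HTTP endpoint, and it builds the commands without executing them.

```python
from urllib.parse import urlencode

# Two hypothetical tool definitions from different servers. The metadata shape
# (name, description, inputSchema as JSON Schema) is identical for both.
SCALE_TOOL = {
    "name": "scale_deployment",
    "description": "Set the replica count of a Kubernetes deployment",
    "inputSchema": {
        "type": "object",
        "properties": {
            "namespace": {"type": "string"},
            "deployment": {"type": "string"},
            "replicas": {"type": "integer", "minimum": 0},
        },
        "required": ["namespace", "deployment", "replicas"],
    },
}

QUERY_TOOL = {
    "name": "query_metrics",
    "description": "Run an instant PromQL query",
    "inputSchema": {
        "type": "object",
        "properties": {"promql": {"type": "string"}},
        "required": ["promql"],
    },
}


def scale_to_kubectl(args: dict) -> list:
    """Translate tool arguments into a kubectl command line (built, not executed)."""
    return ["kubectl", "scale", f"deployment/{args['deployment']}",
            f"--replicas={args['replicas']}", "-n", args["namespace"]]


def query_to_url(base_url: str, args: dict) -> str:
    """Translate tool arguments into a Prometheus HTTP API instant-query URL."""
    return f"{base_url}/api/v1/query?{urlencode({'query': args['promql']})}"


print(scale_to_kubectl({"namespace": "shop", "deployment": "web", "replicas": 4}))
print(query_to_url("http://prometheus:9090", {"promql": "up == 0"}))
```

The agent sees two tools with the same structure. The differences between kubectl flags and Prometheus URLs stay inside each server.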

The standardization provided by MCP brings several key benefits:

Pluggability: Replace components without reconfiguring the entire system

Interoperability: Mix and match different LLMs with various DevOps tools

Reduced Training Costs: Train DevOps practitioners on one interaction model

Future-Proofing: New AI advances can be integrated without disrupting existing systems

This approach reduces vendor lock-in and encourages innovation through competition, allowing DevOps teams to adopt AI technologies incrementally without committing to a single ecosystem.
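Pluggability comes from writing agent logic against the protocol's two core operations (list tools, call a tool) rather than against any one vendor's API. Below is a minimal sketch using Python's `typing.Protocol`. The two server classes are hypothetical stubs that return canned strings, and the keyword match stands in for the tool selection a real LLM would make from the tool descriptions.

```python
from typing import Protocol


class ToolServer(Protocol):
    """The only surface the agent depends on: discover tools, then call one."""

    def list_tools(self) -> list: ...

    def call_tool(self, name: str, arguments: dict) -> str: ...


class GitHubActionsServer:
    """Hypothetical stub; a real server would call the GitHub REST API."""

    def list_tools(self) -> list:
        return [{"name": "rerun_workflow", "description": "Retry a failed workflow run"}]

    def call_tool(self, name: str, arguments: dict) -> str:
        return f"GitHub Actions: re-ran {arguments['build']}"


class JenkinsServer:
    """Hypothetical stub; a real server would call the Jenkins API."""

    def list_tools(self) -> list:
        return [{"name": "rebuild_job", "description": "Retry a failed Jenkins build"}]

    def call_tool(self, name: str, arguments: dict) -> str:
        return f"Jenkins: rebuilt {arguments['build']}"


def retry_failed_build(server: ToolServer, build: str) -> str:
    """Agent logic written once against the protocol, not against a vendor API."""
    for tool in server.list_tools():
        # A real agent lets the LLM choose from descriptions; a keyword match stands in.
        if "retry" in tool["description"].lower():
            return server.call_tool(tool["name"], {"build": build})
    raise LookupError("server advertises no retry tool")


for backend in (GitHubActionsServer(), JenkinsServer()):
    print(retry_failed_build(backend, "build-1234"))
```

Switching from Jenkins to GitHub Actions changes which server object is passed in. The agent code stays the same.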

The Evolution to Agentic DevOps

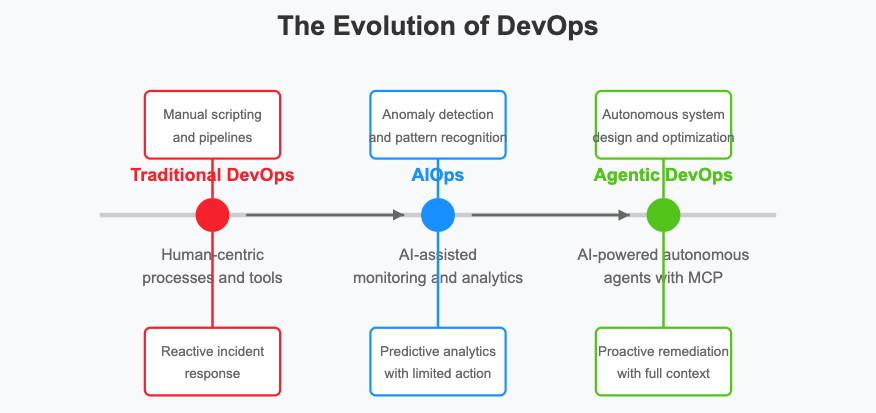

Traditional DevOps: The Human-Centered Approach

Traditional DevOps emerged as a methodology to break down silos between development and operations teams. It relies heavily on:

Manual creation and maintenance of CI/CD pipelines

Human-written scripts for automation

Reactive monitoring and incident response

Extensive documentation for knowledge transfer

While revolutionary in its time, traditional DevOps struggles with the scale and complexity of modern cloud-native environments.

AIOps: AI-Assisted Operations

AIOps represented the first wave of AI integration into DevOps, focusing primarily on:

Automated anomaly detection in monitoring data

Predictive analytics for capacity planning

Intelligent alerting to reduce alert fatigue

Pattern recognition for root cause analysis

While valuable, AIOps tools typically operate as isolated solutions rather than cohesive systems, and they generally lack agency—the ability to act independently based on their findings.

Agentic DevOps: The MCP Revolution

Agentic DevOps, powered by MCP, represents a paradigm shift. Here, AI agents don't just analyze and recommend—they act. With proper authorization and oversight, these agents can:

Autonomously design, implement, and maintain CI/CD pipelines

Dynamically adjust infrastructure based on real-time requirements

Predict, detect, and remediate incidents with minimal human intervention

Continuously refactor and optimize code and configurations

Synthesize and apply knowledge across the entire development lifecycle

The key difference is that MCP enables these agents to understand the full context of the DevOps environment, make informed decisions, and take appropriate actions while maintaining clear communication with human operators.

Practical Applications of MCP in DevOps

Intelligent Infrastructure as Code (IaC)

MCP-enabled agents can revolutionize infrastructure management by:

Generating infrastructure code based on high-level requirements

Continuously optimizing infrastructure configurations for cost and performance

Identifying and resolving infrastructure drift

Creating self-healing infrastructure that responds to failures without human intervention

This can remove much of the manual effort traditionally associated with IaC while improving reliability and efficiency.
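Drift detection, one of the tasks listed above, comes down to diffing the desired state declared in code against the live state reported by the provider. Below is a minimal sketch. It assumes both states are already available as nested dictionaries, for example from a parsed plan and from a read-only tool on a cloud MCP server. `detect_drift` and the sample data are hypothetical. Tools such as `terraform plan` already surface these differences; the sketch only shows what the agent reasons over.

```python
def detect_drift(desired: dict, actual: dict, path: str = "") -> list:
    """Compare declared (IaC) and live configuration recursively; list differences."""
    drift = []
    for key in sorted(desired.keys() | actual.keys()):
        where = f"{path}.{key}" if path else key
        if key not in actual:
            drift.append(f"missing: {where}")       # declared but absent live
        elif key not in desired:
            drift.append(f"unmanaged: {where}")     # live but not in code
        elif isinstance(desired[key], dict) and isinstance(actual[key], dict):
            drift.extend(detect_drift(desired[key], actual[key], where))
        elif desired[key] != actual[key]:
            drift.append(f"changed: {where} {desired[key]!r} -> {actual[key]!r}")
    return drift


desired = {"instance_type": "t3.medium", "min_size": 2,
           "tags": {"env": "prod", "owner": "sre"}}
actual = {"instance_type": "t3.large", "min_size": 2,
          "tags": {"env": "prod"}, "debug": True}

for item in detect_drift(desired, actual):
    print(item)
```

For each finding, an agent still has to decide whether to reconcile the live system to the code or update the code to match reality. That decision is a natural point for human approval.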

Autonomous CI/CD Pipeline Management

With MCP, AI agents can:

Design optimal CI/CD pipelines based on project requirements

Dynamically adjust pipeline configurations as codebases evolve

Identify and resolve pipeline bottlenecks

Implement advanced deployment strategies with automatic rollback capabilities

This reduces the cognitive load on DevOps engineers while accelerating delivery cycles.
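Automatic rollback usually comes down to a decision function that runs repeatedly during a progressive rollout. Below is a minimal sketch. It assumes the agent can read request and error counts for the baseline and canary versions, for instance through a monitoring MCP server. The names and thresholds (`max_ratio`, `min_requests`, the 0.1% floor) are hypothetical and would be tuned per service.

```python
from dataclasses import dataclass


@dataclass
class CanaryStats:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: CanaryStats, canary: CanaryStats,
                    max_ratio: float = 1.5, min_requests: int = 500) -> str:
    """Return 'wait', 'promote', or 'rollback' for a canary release.

    Hypothetical policy: once enough traffic has been seen, roll back if the
    canary's error rate exceeds the baseline's by more than `max_ratio`.
    """
    if canary.requests < min_requests:
        return "wait"  # not enough evidence yet
    # Floor the baseline so a perfectly clean baseline doesn't make one error fatal.
    allowed = max(baseline.error_rate, 0.001) * max_ratio
    return "rollback" if canary.error_rate > allowed else "promote"


print(canary_decision(CanaryStats(10000, 20), CanaryStats(1000, 10)))
```

Comparing against the live baseline, rather than a fixed error budget, keeps the check meaningful when overall traffic conditions change during the rollout.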

Predictive Incident Management

MCP-powered agents excel at incident management through:

Proactive identification of potential issues before they impact users

Automated diagnosis and triage of incidents

Context-aware remediation actions

Post-incident analysis and system hardening

This shifts incident management from a reactive to a proactive discipline.
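Proactive identification often starts with extrapolating a resource trend, the same idea behind PromQL's `predict_linear()` function. Below is a minimal least-squares sketch. It assumes hourly disk-usage samples have already been fetched, for instance through a Prometheus MCP server. The function name, sample values, and 48-hour threshold are hypothetical.

```python
from typing import List, Optional, Tuple


def hours_until_full(samples: List[Tuple[float, float]],
                     capacity: float) -> Optional[float]:
    """Fit usage = intercept + slope * t by least squares; return hours until full.

    samples: (hour, used) pairs in the same unit as `capacity`.
    Returns None when usage is flat or shrinking.
    """
    n = len(samples)
    if n < 2:
        return None
    mean_t = sum(t for t, _ in samples) / n
    mean_u = sum(u for _, u in samples) / n
    cov = sum((t - mean_t) * (u - mean_u) for t, u in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    if var == 0:
        return None
    slope = cov / var
    if slope <= 0:
        return None
    intercept = mean_u - slope * mean_t
    # Time at which the fitted line reaches capacity, relative to the last sample.
    t_full = (capacity - intercept) / slope
    return max(t_full - samples[-1][0], 0.0)


eta = hours_until_full([(0, 50.0), (1, 52.0), (2, 54.0), (3, 56.0)], capacity=100.0)
if eta is not None and eta < 48:
    print(f"disk projected full in {eta:.0f}h: open a ticket before users notice")
```

Usage grows by 2 GB per hour from 56 GB, so the fitted line reaches 100 GB 22 hours after the last sample. That is well inside the 48-hour window, so the agent can act long before the disk fills.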

Continuous Documentation and Knowledge Management

MCP enables AI systems to:

Automatically generate and update technical documentation

Create and maintain runbooks for common procedures

Document system architecture and design decisions

Capture and share institutional knowledge

This addresses one of the persistent challenges in DevOps: keeping documentation accurate and up-to-date.
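MCP servers describe their tools in machine-readable form, so reference documentation can be regenerated from the same metadata the agent uses. That keeps the docs from silently diverging from what the system actually exposes. Below is a minimal sketch. It assumes tool metadata in the `tools/list` result shape (`name`, `description`, `inputSchema`). `render_tool_docs` and the sample tools are hypothetical.

```python
def render_tool_docs(tools: list) -> str:
    """Render MCP-style tool metadata as a Markdown reference page."""
    lines = ["# Available operations", ""]
    for tool in sorted(tools, key=lambda t: t["name"]):
        schema = tool.get("inputSchema", {})
        required = set(schema.get("required", []))
        lines += [f"## {tool['name']}", "", tool.get("description", "(no description)"), ""]
        for arg, spec in schema.get("properties", {}).items():
            flag = "required" if arg in required else "optional"
            lines.append(f"- `{arg}` ({spec.get('type', 'any')}, {flag})")
        lines.append("")
    return "\n".join(lines)


sample_tools = [
    {"name": "tail_logs", "description": "Return the last N log lines of a service",
     "inputSchema": {"type": "object",
                     "properties": {"service": {"type": "string"},
                                    "lines": {"type": "integer"}},
                     "required": ["service"]}},
    {"name": "restart_service", "description": "Restart a systemd service",
     "inputSchema": {"type": "object",
                     "properties": {"service": {"type": "string"}},
                     "required": ["service"]}},
]
print(render_tool_docs(sample_tools))
```

Running the generator in CI whenever a server's tool list changes keeps the page current without manual edits.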

Implementing MCP in Your DevOps Practice

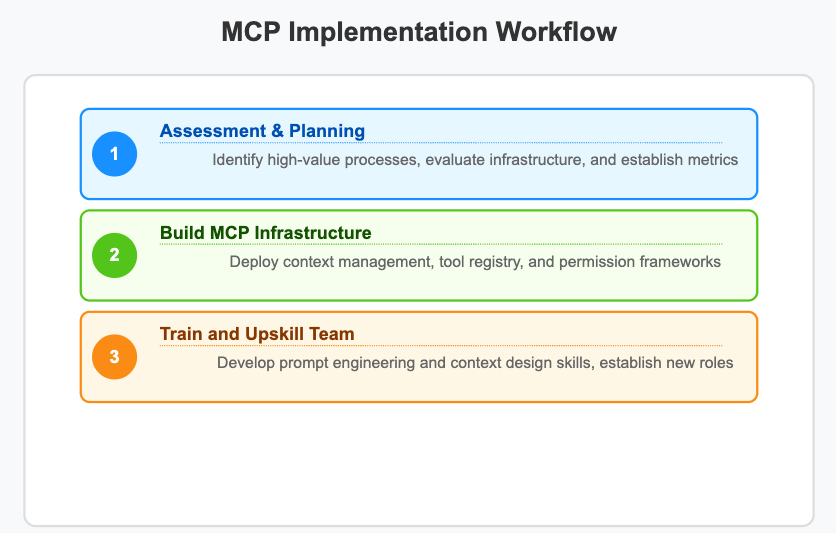

Assessment and Planning

Begin by:

Identifying high-value, low-risk processes for initial MCP implementation

Checking which of your existing tools already have MCP servers available and which would need custom connectors

Determining necessary security and governance controls

Developing clear metrics for measuring success

This creates a foundation for successful adoption.

Selecting or Building Your MCP Implementation

Choose your implementation path:

Adopt existing MCP servers for your critical DevOps tools (Kubernetes, AWS, etc.)

Contribute to open-source MCP implementations for your tool stack

Build custom connectors for proprietary or internal systems

Establish governance frameworks for AI operations

This infrastructure provides the scaffolding for agentic operations.
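A custom connector for an internal system mostly wraps an internal API call in a function and publishes a schema for it. The sketch below shows that pattern, deriving the `inputSchema` from Python type hints. The official MCP Python SDK's `FastMCP` class offers a `@mcp.tool()` decorator in a similar spirit, but this standalone version only illustrates the idea. `tool`, `REGISTRY`, and `lookup_service_owner` are hypothetical, the type mapping covers only a few primitive types, and the catalog data is fake.

```python
import inspect
from typing import Callable, get_type_hints

# Map Python annotations to JSON Schema types (primitives only, for brevity).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}
REGISTRY: dict = {}


def tool(fn: Callable) -> Callable:
    """Register `fn` as a tool, deriving its inputSchema from the signature."""
    hints = get_type_hints(fn)
    props, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        # Unannotated or unmapped parameters fall back to "string".
        props[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    REGISTRY[fn.__name__] = {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": props, "required": required},
        "handler": fn,  # kept server-side; strip before returning tools/list
    }
    return fn


@tool
def lookup_service_owner(service: str, environment: str = "prod") -> str:
    """Return the team that owns a service in the internal catalog."""
    # Hypothetical data; a real connector would query your internal service catalog.
    catalog = {("billing", "prod"): "team-payments"}
    return catalog.get((service, environment), "unknown")


print(REGISTRY["lookup_service_owner"]["inputSchema"])
```

Deriving the schema from the signature means the published contract cannot drift from the code that implements it.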

Training and Upskilling Your Team

Prepare your team by:

Developing the skills MCP work depends on, such as prompt engineering and context design

Training on effective human-AI collaboration

Establishing new roles such as AI Operations Engineers and Prompt Architects

Creating feedback loops for continuous improvement

This ensures your team can effectively work alongside AI agents.

Contributing to the MCP Ecosystem

Scale your implementation by:

Sharing innovations and best practices with the wider community

Participating in standards discussions to shape MCP's evolution

Building and open-sourcing tool connectors for underserved areas

Documenting your organization's journey for others to learn from

This approach helps mature the ecosystem while establishing your organization as a leader in the field.

Challenges and Considerations

Security and Governance

MCP implementation requires careful attention to:

Robust authentication and authorization frameworks

Comprehensive audit trails for AI-initiated actions

Clear boundaries for autonomous operations

Regular security assessments of the MCP infrastructure

This ensures that increased automation doesn't come at the cost of security.
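Clear boundaries and audit trails can both be enforced at one choke point that every AI-initiated tool call passes through. Below is a minimal sketch. It assumes a per-environment allowlist and uses an in-memory list in place of a durable, append-only audit store. `POLICY`, `audited_call`, and the tool names are hypothetical. The MCP specification also asks host applications to obtain user consent before invoking tools, so a wrapper like this complements that safeguard rather than replacing it.

```python
import json
import time
from typing import Callable

# Hypothetical policy: which tools an agent may run, per environment.
POLICY = {
    "staging": {"get_logs", "restart_pod", "scale_deployment"},
    "prod": {"get_logs"},  # read-only in production without a human
}


def audited_call(agent_id: str, env: str, tool: str, args: dict,
                 handler: Callable[..., str], audit_log: list) -> str:
    """Enforce the policy, run the tool if allowed, and append a JSON audit record."""
    allowed = tool in POLICY.get(env, set())
    record = {"ts": time.time(), "agent": agent_id, "env": env,
              "tool": tool, "args": args, "allowed": allowed}
    if not allowed:
        record["outcome"] = "denied"
        audit_log.append(json.dumps(record))
        raise PermissionError(f"{tool} is not permitted in {env}")
    try:
        result = handler(**args)
        record["outcome"] = "ok"
        return result
    except Exception as exc:
        record["outcome"] = f"error: {exc}"
        raise
    finally:
        # Record every permitted attempt, whether it succeeded or failed.
        audit_log.append(json.dumps(record))


audit_log: list = []
print(audited_call("agent-7", "staging", "restart_pod", {"pod": "web-1"},
                   lambda pod: f"restarted {pod}", audit_log))
try:
    audited_call("agent-7", "prod", "restart_pod", {"pod": "web-1"},
                 lambda pod: f"restarted {pod}", audit_log)
except PermissionError as err:
    print(f"blocked: {err}")
print(len(audit_log), "audit records")
```

Denied attempts are logged as well as successful ones. Patterns of denied actions are often the most useful signal in a security review.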

Human-AI Collaboration Models

Effective implementation depends on:

Clearly defined roles and responsibilities for humans and AI agents

Intuitive interfaces for human oversight and intervention

Transparent communication of AI reasoning and planned actions

Mechanisms for humans to provide feedback and course corrections

This creates a productive partnership rather than an adversarial relationship.
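Transparent reasoning and human intervention can be combined in one pattern. The agent emits a structured plan that includes its rationale, and any action that changes state waits for a human decision. Below is a minimal sketch. `PlannedAction`, `execute_with_oversight`, and `console_approver` are hypothetical, and the simple read-only/mutating split is an assumption. Recent MCP specification revisions let servers annotate tools with hints such as `readOnlyHint` and `destructiveHint`, which could populate a flag like `mutating`.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PlannedAction:
    tool: str
    args: dict
    reasoning: str  # the agent's stated rationale, shown to the operator
    mutating: bool  # does this action change system state?


def execute_with_oversight(action: PlannedAction,
                           approve: Callable[[PlannedAction], bool],
                           run: Callable[[str, dict], str]) -> str:
    """Run read-only actions directly; require explicit approval for mutating ones."""
    if action.mutating and not approve(action):
        return f"skipped {action.tool}: rejected by operator"
    return run(action.tool, action.args)


def console_approver(action: PlannedAction) -> bool:
    # Hypothetical interactive approver; in practice a chat or ticket workflow.
    print(f"Agent wants to run {action.tool}({action.args})")
    print(f"Reason: {action.reasoning}")
    return input("Approve? [y/N] ").strip().lower() == "y"


plan = PlannedAction("rollback_release", {"release": "web"},
                     "error rate doubled after the last deploy", mutating=True)
print(execute_with_oversight(plan, approve=lambda a: False,
                             run=lambda tool, args: f"ran {tool}"))
```

Because `approve` is a parameter, the same gate can use a console prompt during experiments and a chat-based approval flow in production.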

Ethical Considerations

Organizations must address:

The impact of automation on DevOps jobs and team structures

Responsible limits on AI agency and autonomy

Transparency in AI decision-making processes

Equity in how AI benefits are distributed across the organization

This ensures that technological advancement aligns with organizational values.

The Future of DevOps Careers in an MCP World

Emerging Roles and Opportunities

As MCP reshapes DevOps, new roles are emerging:

AI Operations Engineers: Specialists who design and maintain MCP infrastructures

Prompt Architects: Experts in crafting effective contexts and instructions for AI agents

DevOps Governors: Professionals who establish and enforce boundaries for AI operations

Automation Strategists: Leaders who identify high-value opportunities for AI implementation

These roles require a blend of traditional DevOps knowledge and AI-specific skills.

Essential Skills for the MCP Era

To thrive in this new landscape, DevOps professionals should develop:

Strong foundations in prompt engineering and context design

Understanding of large language model capabilities and limitations

Expertise in designing effective human oversight mechanisms

Skills in interpreting and validating AI-generated outputs

Ability to design clear boundaries and guardrails for autonomous systems

These skills complement rather than replace traditional DevOps expertise.

Career Transition Strategies

For those looking to evolve their careers:

Start with small-scale MCP experiments in personal or non-critical projects

Pursue formal education in AI fundamentals and LLM operations

Participate in open-source MCP implementations and communities

Develop expertise in a specific domain of MCP application

Position yourself as a bridge between traditional DevOps teams and AI capabilities

This creates a path for gradual, sustainable career evolution.

Conclusion: The USB Moment for DevOps

The introduction of USB transformed how we connect physical devices by establishing a universal standard that "just works." MCP is poised to deliver a similar transformation for DevOps—creating a universal standard for how AI agents interact with our tools and environments.

Just as USB didn't eliminate the need for hardware engineers but changed the nature of their work, MCP won't eliminate DevOps professionals. Instead, it will elevate their role from manual operators to strategic architects and governors of AI-powered systems. The most successful DevOps professionals of tomorrow will be those who embrace this shift, developing the skills to design, implement, and manage MCP infrastructures while providing the human judgment and oversight that remain irreplaceable.

For those willing to adapt and grow, MCP opens new frontiers of opportunity. By understanding and implementing this new standard, today's DevOps practitioners can ensure they remain at the forefront of technological innovation, shaping the future rather than being shaped by it.

The question isn't whether AI will transform DevOps—it's who will guide that transformation. With the right knowledge and skills, that leader can be you.